Introduced in MySQL Shell 8.0.21, the new MySQL Shell Dump and Load utilities has as its main goal to minimize the time needed to create and restore logical dumps of large data sets.

Through heavy parallelization and other techniques, we were able to reduce the time needed for these tasks by an order of magnitude compared to previous dump utilities.

In fact, we’ve observed dump speeds close to 3 GB/s and loads at over 200MB/s in benchmarks using freely available large datasets. Read more about that and performance comparisons with other similar tools in part 2 of this series.

In this article, I’ll describe our approach to achieve that, focusing on the loader side. You can read about what was done in the dumper, which has even bigger performance gains than the loader, in part 4.

This is part 3 of the blog post series about MySQL Shell Dump & Load:

- MySQL Shell Dump & Load part 1: Demo!

- MySQL Shell Dump & Load part 2: Benchmarks

- MySQL Shell Dump & Load part 4: Dump Instance & Schemas

Disabling InnoDB Redo Log

In many cases, the bottleneck during data loading is the InnoDB Redo Log or binary log. During a logical load, the binary log is often disabled (loadDump() has skipBinlog: true). And it is now possible to also disable the InnoDB Redo Log in MySQL Server 8.0.21.

With ALTER INSTANCE DISABLE INNODB REDO_LOG, both the redo log and doublewrite can be disabled, which improves the throughput and reduces the write multiplication on disk. Note that MySQL Shell loadDump() does not disable the redo log and this has to be manually performed prior to loading the data.

Parallel Dump and Load

The key to getting data out and back into MySQL as fast as possible is to distribute work between multiple parallel sessions/threads.

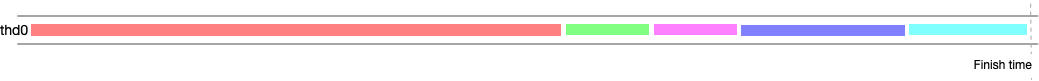

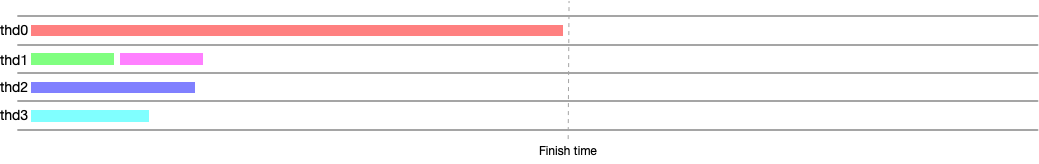

That’s probably not news to most people. And indeed, mysqlpump already does that, but it’s limited in granularity to one thread per table (for dumping data only, loading is single threaded). That works well if most of your tables have about the same size. But that’s rarely the case and you often end up with a single thread dumping 1 or 2 huge tables while all other threads are finished and idle. It also dumps to a single SQL file, which creates a serialization point. The single SQL file also makes it harder to parallelize loading all that data, since one would have to parse the file, besides finding suitable boundaries within the script to divide work between threads.

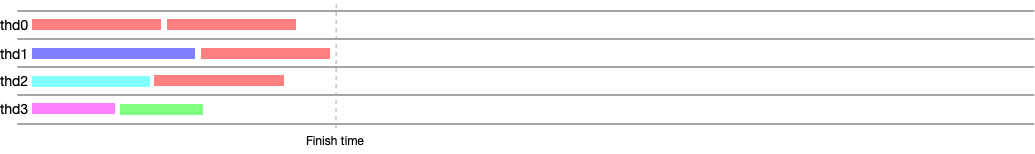

The Shell uses a more aggressive approach by chunking tables into smaller parts during dumping, which are stored in separate files. That way, we can parallelize even when working on a single table and loading doesn’t need to worry about splitting files.

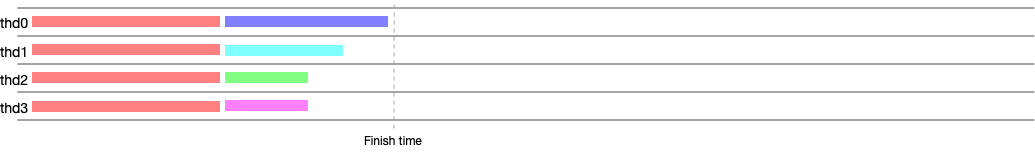

mydumper also breaks down tables into smaller chunks, but it will load chunks of the same table all at once. Although faster than loading a whole table in a single thread, that doesn’t scale as well as our approach which tries to maximize ingestion rate by carefully scheduling chunks, as explained later in this post.

In the graphs below, we represent the differences in efficiency of each approach:

Other notable features MySQL Shell has:

- The dump and load steps themselves can also be done concurrently. You can start loading a dump even while it’s still executing. Use cases that involve copying a database across servers can be sped up by taking advantage of that.

- Both dump and load have built-in support for directly storing to and loading from OCI Object Storage buckets.

- Table data is compressed by default using zstd, which not only reduces I/O or network traffic, but effectively bypasses I/O bottlenecks while keeping CPU usage relatively low. zstd is a lot faster at compressing and decompressing than gzip/zlib, at the cost of just slightly less compression.

- The Shell also helps saving time by supporting resuming of interrupted loads. Load progress is tracked externally so that you can retry large loads from where they left, instead of restarting from scratch.

Dump Format

Unlike dumps produced by mysqldump, mysqlpump, the Shell dumper writes DDL, data and metadata to separate files. Tables are also sub-divided into chunks and written into multiple CSV-like files.

This may have some disadvantages, since dumps are no longer a single file that can be conveniently copied around. However, there are several advantages:

- Loading dumps is no longer an all-or-nothing process. Because DDL scripts, data and metadata is written to separate files, we can selectively load only what we want from the dump, instead of being limited to loading everything that was dumped, as is.

- It’s easier to apply filtering and transformations to both the schema and data before loading them.

- Resuming interrupted loads is simpler, since we can keep track of what has been loaded already and skip them when retrying.

- Table data is dumped in a format suitable for

LOAD DATA LOCAL INFILEinstead of plain SQLINSERTstatements. The reduced amount of parsing alone should mean performance is at least a little better, even with single threaded loading. - Because tables already come pre-partitioned in separate files, loading tables in parallel is a lot easier and faster. The importTable shell utility already supports loading single-file CSV table dumps in parallel, but it has to scan the file to find chunk boundaries before loading them, which can take some time.

Maximizing Ingestion Rate

To maximize MySQL load performance it’s not enough to parallelize work at the client side. We also need to help the MySQL server, by shaping and ordering work in an optimal way, allowing it to ingest data as fast as it can.

For that, the loader does the following:

- Load larger tables/chunks first. This has the effect of balancing the total workload across all threads. The last thing we want is for smaller tables to all finish quickly and have that one huge table taking hours to be loaded, with threads sitting idle.

- Prioritize concurrent loading of different tables. If at any point, we can choose between loading chunks of the same table or loading chunks of different tables, we prefer the latter. The overall throughput we can get by using 4 threads to load 4 different tables is higher than what we’d get by loading chunks of the same table. This is because the server side threads for each of the 4 loading sessions would have to compete for the same locks at the storage engine level.

- Prioritize loading chunks of larger tables. If there are more threads than tables left to be loaded, we schedule chunks to threads proportionally to the amount of data remaining in their respective tables, while still making sure that at least one chunk of each remaining table is being loaded. This way, we will be maximizing both the overall throughput and the individual throughput of the larger tables, trying to get the whole thing finished sooner, at about the same time.

- Dynamic scheduling. Every time a thread is done loading a chunk, we compute the best chunk to load next, ensuring that the scheduling remains close to ideal regardless of how long it takes to load each chunk because of indexes, chunk size, row size etc.

- Pre-sort rows. It’s known that InnoDB works best when rows are inserted in Primary Key value order. But that is already taken care of by the dumper, since it queries and writes rows in order. The sorting may make the dump query take a little bit longer but leaves the data ready for loading.

To Defer or Not Defer (Indexes)

A common practice for loading tables faster is deferring the creation of secondary indexes. That is, strip secondary indexes when creating the table, load the data and only then create indexes.

In our tests, we’ve found that with one exception, deferring table indexes generally doesn’t help much and can even be counter-productive. Whether deferring indexes helps or not depends on your specific case, so we recommend experimenting with the deferTableIndexes option.

One benefit of setting deferTableIndexes to all is that the secondary indexes will be less fragemented, potentially taking up less disk space.

However, we did see consistent improvements in load times when deferring full-text indexes. Because of that, deferTableIndexes defaults to fulltext. Setting it to all would defer all indexes from all tables.

Conclusion

By redesigning logical dumps from the ground-up, we were able to obtain significant performance improvements compared to previous tools. In many cases, dumps that used to take hours or even a whole day, in even the most powerful hardware, can now be done in less than an hour, if not minutes.

Equally as important, loading these dumps to restore a server is also much faster. Restoring large databases from a Shell dump can take a fraction of the time compared to loading equivalent .sql dump files. That should free up some valuable time when an emergency restore is needed!

We hope you’ll try our new utilities and find them to be not only fast, but also capable and easy to use.