The MySQL family has grown with the introduction of MySQL Router, which brings high availability and Fabric integration to all MySQL clients, independently of any connector-specific support. This blog post focuses on the throughput of the Router's Connection Routing plugin and evaluates the overhead it may add to application performance compared with a direct connection.

For those in a hurry, here are the main findings:

- While the performance overhead depends significantly on the workload, our benchmarks show that for Sysbench RO and RW, with the Router co-located with the client, the maximum throughput is close to that of direct connection;

- At the same number of threads, the Router throughput is mostly within 10% of direct connection, while its maximum throughput is reached at a somewhat higher thread count, as more threads help hide the added latency;

- The average transaction latency increases slightly but, unexpected as it may be, in some of the configurations we tested the 95th percentile latency is similar to, or even lower than, that of direct connection.

1. About the performance of the Connection Routing plugin

The Connection Routing plugin mediates the connections between clients and servers with an extra layer of control. In essence, it forwards the MySQL requests from a set of clients to a set of servers, taking into account the availability of those servers and, optionally, the topology information obtained from the Fabric cache plugin.

The Connection Routing plugin forwards the data as it arrives from clients and servers; the data stream is not processed to provide any additional services. Since it applies no optimizations to that stream, Connection Routing is not expected to improve performance beyond what a direct connection can achieve.
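To make "forwarding without processing" concrete, here is a minimal sketch of a TCP relay written with Python's asyncio. It is not the Router's actual code: it assumes a static list of `(host, port)` destinations tried in order, a simplified stand-in for the availability handling described above, and all function names (`start_router`, `handle_client`, `pipe`, `open_first_available`) are hypothetical.

```python
import asyncio


async def open_first_available(destinations):
    """Connect to the first (host, port) in `destinations` that accepts."""
    last_error = None
    for host, port in destinations:
        try:
            return await asyncio.open_connection(host, port)
        except OSError as exc:
            last_error = exc  # destination unavailable: try the next one
    raise ConnectionError(f"no destination available (last error: {last_error})")


async def pipe(reader, writer):
    """Copy bytes from `reader` to `writer` until EOF, without parsing them."""
    try:
        while True:
            data = await reader.read(65536)
            if not data:
                break
            writer.write(data)
            await writer.drain()
    finally:
        writer.close()


async def handle_client(client_reader, client_writer, destinations):
    try:
        server_reader, server_writer = await open_first_available(destinations)
    except ConnectionError:
        client_writer.close()
        return
    # Forward both directions concurrently; no data is inspected or changed.
    await asyncio.gather(
        pipe(client_reader, server_writer),
        pipe(server_reader, client_writer),
        return_exceptions=True,
    )


async def start_router(bind_host, bind_port, destinations):
    """Listen on (bind_host, bind_port) and relay each client to a backend."""
    return await asyncio.start_server(
        lambda r, w: handle_client(r, w, destinations), bind_host, bind_port
    )
```

For example, `await start_router("127.0.0.1", 7001, [("db1", 3306), ("db2", 3306)])` would relay clients connecting to port 7001 to whichever of the two hypothetical servers accepts first. In this sketch every byte makes an extra pass through user space and an extra socket, which is the kind of cost the rest of this post tries to measure.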

The Router's flexibility comes at the price of an extra hop in the network, but the effective cost is not straightforward to predict. There are three main ways in which the Connection Routing plugin can affect performance:

- The throughput between clients and servers may be reduced;

- The transaction latency can increase;

- The connection establishment can take longer.
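The interplay between the first two effects can be illustrated with a back-of-the-envelope model, not derived from our measurements. It assumes a closed-loop benchmark (each client thread runs one transaction at a time, as Sysbench threads do), constant latencies, and a fixed server capacity; by Little's law, throughput is then concurrency divided by response time until the server saturates. The function and parameter names are hypothetical.

```python
def closed_loop_throughput(threads, server_latency_s, hop_latency_s, server_capacity_tps):
    """Closed-loop throughput estimate, bounded by server capacity.

    Each client thread has one transaction in flight, so by Little's law
    throughput = threads / response_time until the server saturates.
    """
    response_time_s = server_latency_s + hop_latency_s
    return min(threads / response_time_s, server_capacity_tps)


def threads_to_saturate(server_latency_s, hop_latency_s, server_capacity_tps):
    """Thread count at which the estimate above reaches server capacity."""
    return server_capacity_tps * (server_latency_s + hop_latency_s)


# Illustrative parameters only (not measured values): with vs. without an extra hop.
for hop_s in (0.0, 0.0005):
    print(hop_s,
          closed_loop_throughput(20, 0.005, hop_s, 3000),
          threads_to_saturate(0.005, hop_s, 3000))
```

Under these assumptions, the extra hop lowers throughput at a given thread count but only moves the saturation point to a higher thread count; the maximum throughput itself is unchanged. This is consistent with the observation that the Router reaches its maximum at somewhat more threads.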

The next section presents some benchmarks that try to evaluate that overhead.

2. Benchmarking the Router

The benchmarks consisted of running four workloads from the Sysbench OLTP suite: Read-Write, Read-only, Write-only (RW without any read operations) and Point-Select. Each workload was executed both directly between two servers (direct connection) and through a Router co-located with the client (Router).
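The methodology (the same workload at increasing thread counts, comparing throughput, average latency and 95th percentile latency) can be sketched as a small closed-loop driver. This is not Sysbench: `transaction` is a hypothetical callable standing in for one OLTP transaction, sent either directly to the server or through the Router's port, and the nearest-rank percentile used here may differ from Sysbench's own percentile calculation. Python threads suit this only because such a callable would spend most of its time waiting on the network.

```python
import threading
import time
from math import ceil


def percentile(samples, pct):
    """Nearest-rank percentile, e.g. pct=95 for the 95th percentile."""
    if not samples:
        raise ValueError("percentile of an empty sample")
    ordered = sorted(samples)
    rank = ceil(pct * len(ordered) / 100)  # 1-based rank
    return ordered[max(rank, 1) - 1]


def run_closed_loop(transaction, threads, duration_s):
    """Call `transaction` back to back from `threads` threads for about
    `duration_s` seconds; return (throughput in tx/s, average latency,
    95th percentile latency), with latencies in seconds."""
    latencies = []
    lock = threading.Lock()
    deadline = time.perf_counter() + duration_s

    def worker():
        local = []
        while time.perf_counter() < deadline:
            start = time.perf_counter()
            transaction()
            local.append(time.perf_counter() - start)
        with lock:
            latencies.extend(local)

    workers = [threading.Thread(target=worker) for _ in range(threads)]
    begin = time.perf_counter()
    for w in workers:
        w.start()
    for w in workers:
        w.join()
    elapsed = time.perf_counter() - begin
    if not latencies:
        raise RuntimeError("no transaction completed; increase duration_s")
    return (len(latencies) / elapsed,
            sum(latencies) / len(latencies),
            percentile(latencies, 95))
```

Running it twice per thread count, once with `transaction` connected to the server and once connected to the Router, yields a direct-versus-Router comparison of the same three metrics.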

The results and the main findings of each of these tests are presented in the following sections.

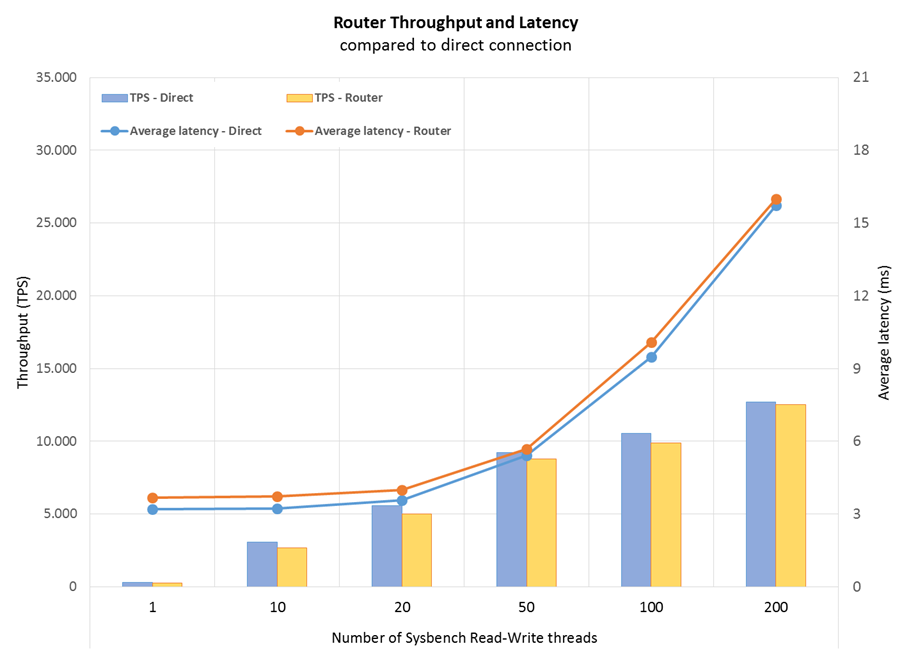

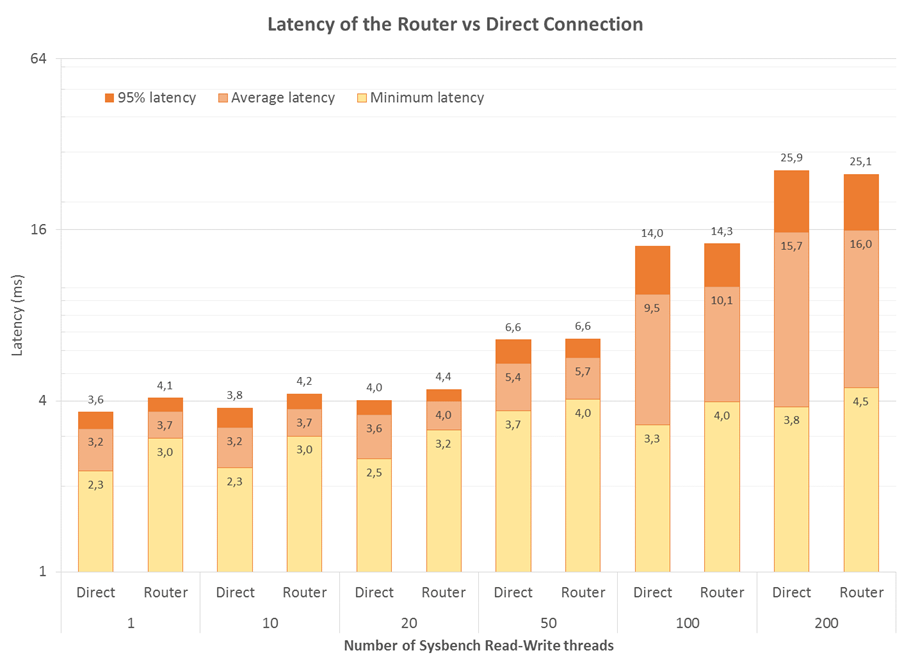

2.1 Sysbench RW

Some observations:

- With 200 client threads, the throughput of Sysbench RW through Connection Routing comes within 2% of direct connection, and from 20 threads onward it is already within 10%;

- The average latency overhead also drops below 2% compared to direct connection;

- The 95th percentile latency is, in fact, lower with the Router than with direct connection for many thread counts, which we think results from the Router preserving the order in which connections are received from the clients.

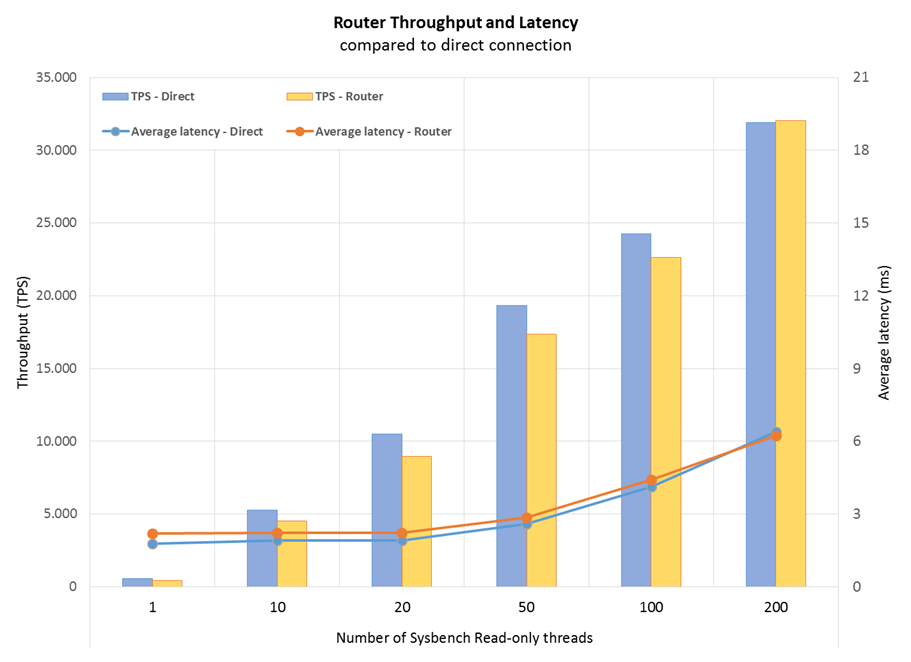

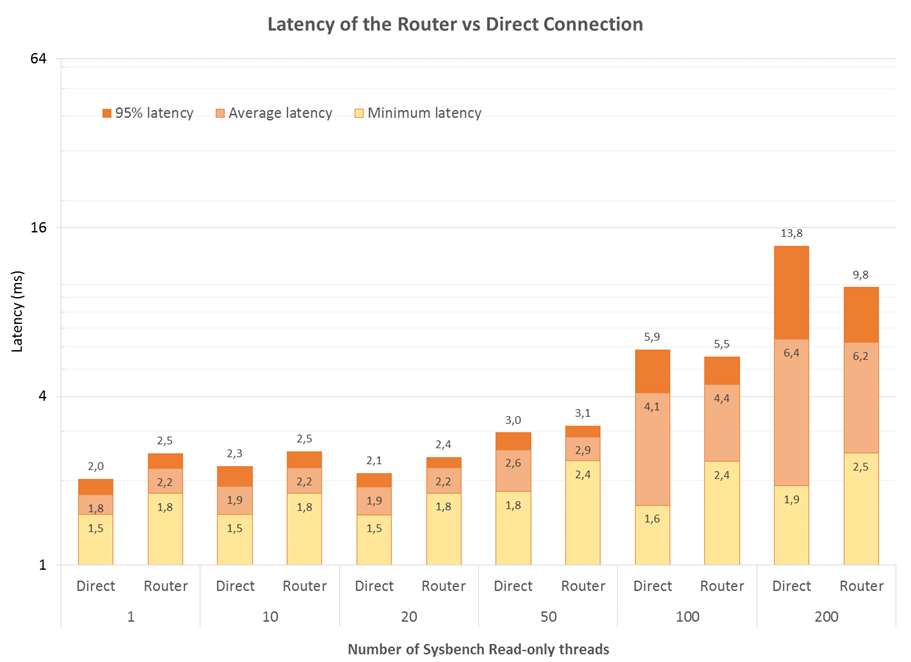

2.2 Sysbench Read-only

Some observations:

- The throughput in Sysbench RO reaches that of direct connection, although it takes more threads to reach parity than in Sysbench RW;

- With 200 threads, the average latency is even lower than with direct connection, and the effect is even clearer in the 95th percentile latency.

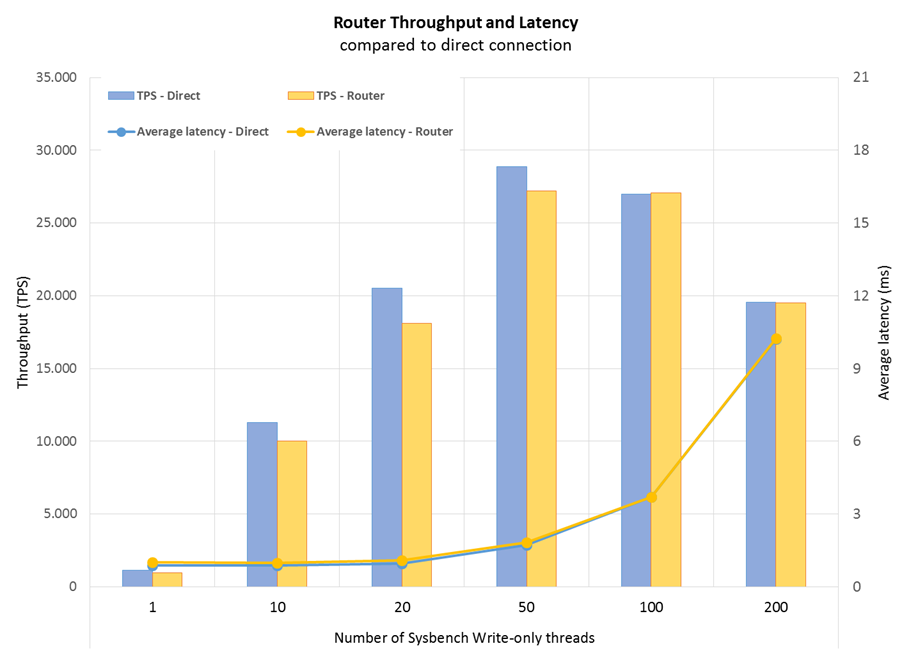

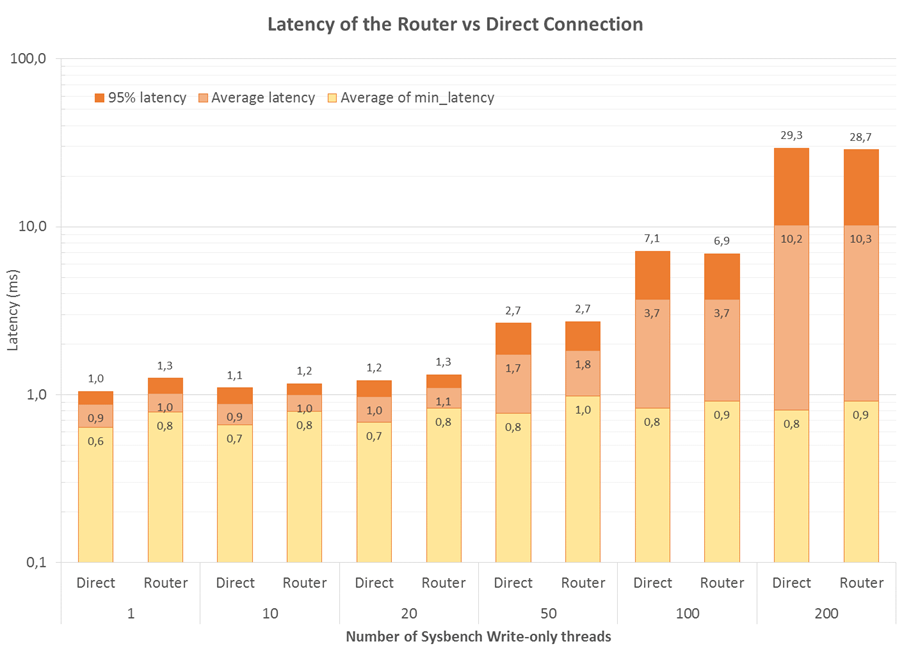

2.3 Sysbench Write-only

Some observations:

- The throughput comes within 5% of that of direct connection;

- Being a write-only workload, its latency is lower than that of Sysbench RW and RO; nevertheless, the difference between direct connection and the Router is small, especially for the 95th percentile latency.

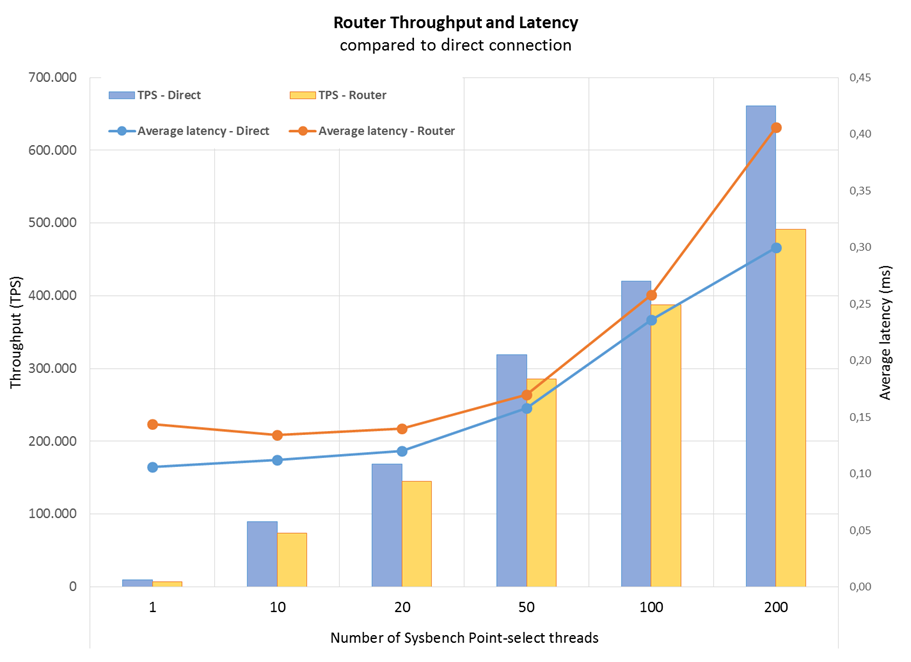

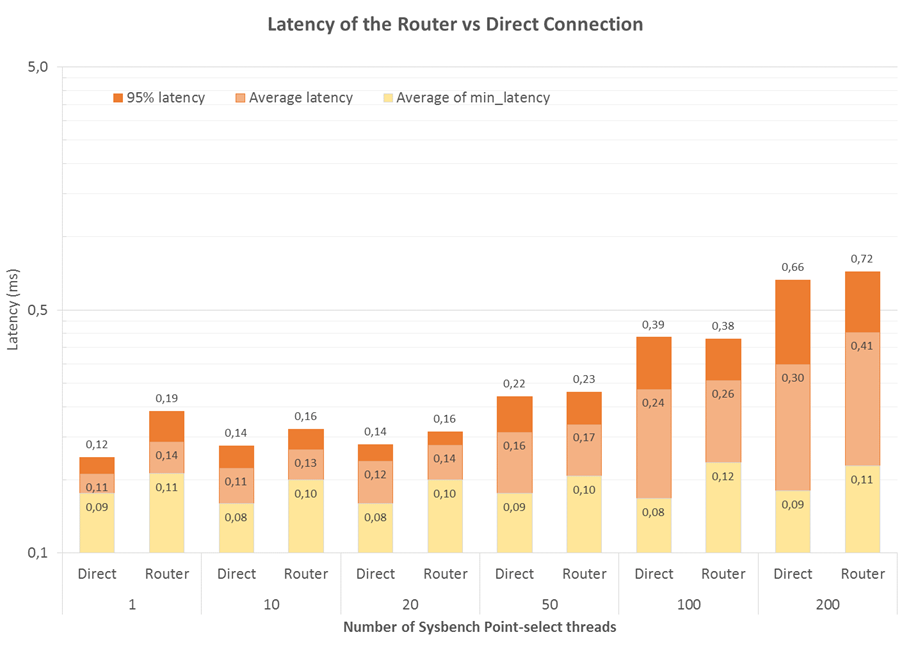

2.4 Sysbench Point-Select

Some observations:

- At 100 threads the Router reaches over 90% of the direct connection throughput, but when going to 200 threads, direct connection increases its throughput by almost 60% while the Router increases it by no more than 25%;

- The average latency overhead is also just below 10% in that configuration, but it then diverges significantly;

- Connection Routing therefore shows a higher overhead here, mainly because the transactions are very small and the transaction rate is very high;

- We suspect this is also due to the Router being co-located with the client: with many threads and scheduling operations, it becomes harder for the Router to keep pace.

3. Final considerations

The performance impact of Connection Routing is expected to depend on the workload:

- If transactions are not small and connections are mostly persistent, the overhead of Connection Routing should be very small;

- If transactions are small or the transaction rate is very high, we may see some overhead and a reduced maximum rate;

- If transactions are very small and/or connections have to be re-established for each transaction, the overhead may be more significant.
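The reasoning behind these three cases can be captured in a rough model. It assumes the Router adds a fixed cost per client/server round trip and, when connections are not reused, a fixed extra cost per connection setup; neither cost is a measured value, and all names below are hypothetical.

```python
def relative_overhead(txn_time_s, hop_cost_s, round_trips_per_txn=1,
                      extra_connect_s=0.0, reconnect_every_txn=False):
    """Estimate the fractional increase in per-transaction time caused by
    an extra forwarding hop.

    txn_time_s          -- time of one transaction over a direct connection
    hop_cost_s          -- assumed added cost per round trip via the router
    round_trips_per_txn -- statements (round trips) per transaction
    extra_connect_s     -- assumed added cost of opening a connection via the router
    reconnect_every_txn -- whether a new connection is opened per transaction
    """
    extra_s = round_trips_per_txn * hop_cost_s
    if reconnect_every_txn:
        extra_s += extra_connect_s
    return extra_s / txn_time_s


# Illustrative numbers only: the same hop cost weighs far more on a short transaction.
large = relative_overhead(txn_time_s=0.010, hop_cost_s=0.0001)
small = relative_overhead(txn_time_s=0.0005, hop_cost_s=0.0001)
print(f"large transaction: {large:.0%}, small transaction: {small:.0%}")
```

Because the added cost does not shrink with the transaction, its relative weight grows as transactions get shorter, and reconnecting for every transaction adds the setup cost on top of it.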

In our testing, however, Connection Routing is mostly able to keep up with the client workload in Sysbench RW, RO and WO, staying within 10% of direct connection in throughput and latency, particularly when the number of threads is high enough to hide the added latency. When the workload has many small transactions, or when concurrency is low, the overhead is larger, although this is something we can improve on a bit.

In the end, it’s good to see the newly arrived Router already behaving like a champ!

Enjoy!