The MySQL Development Team is thrilled to announce a new 8.0 Maintenance Release of MySQL Shell AdminAPI – 8.0.19!

This release sets the bar higher by introducing a new integrated solution based on a well-known replication technology – MySQL InnoDB ReplicaSet!

On top of that, it sets the base for a new set of MySQL Router related features and brings major quality improvements.

MySQL Shell AdminAPI

This release focused on three main feature-sets:

- MySQL InnoDB ReplicaSet: The brand new integrated solution using MySQL Replication.

- New Metadata schema version and deployments upgrade: To support InnoDB ReplicaSet and to improve its capabilities, the Metadata schema version was upgraded. To support upgrading older deployments to the latest version using the new Schema, new functions were introduced to guide and perform the required actions.

- MySQL Router Management: Because MySQL Router is a core component of InnoDB Cluster/ReplicaSet, the AdminAPI was extended to lay the groundwork for Router management capabilities.

And as always, quality has been greatly improved with many bugs fixed!

This blog post does not go into detail about MySQL InnoDB ReplicaSet, the new Metadata schema version, or the corresponding upgrade functions. Check the previous blog post for an introduction to ReplicaSet, and stay tuned for the upcoming blog post introducing the new Metadata schema!

MySQL Router Management

The AdminAPI’s spotlight was turned to MySQL Router, another core component of InnoDB Cluster/ReplicaSet. This release introduces the first Router management and monitoring commands into the AdminAPI.

List Routers

MySQL Router can be deployed in several locations depending on the system’s architecture. However, the recommendation is to deploy it on the same host as the application that uses it.

To improve the usability and observability of InnoDB Cluster/ReplicaSet, we introduced a new feature that lets users obtain information about the cluster’s/replicaset’s Router instances with a single command call:

<Cluster>.listRouters()

<ReplicaSet>.listRouters()

The command provides information about:

- Router name

- Last check-in timestamp (periodic check-in stored in the Metadata)

- Hostname on which the Router is running

- RO and RW classic protocol ports on which the Router is listening

- RO and RW X Protocol ports on which the Router is listening

- Version

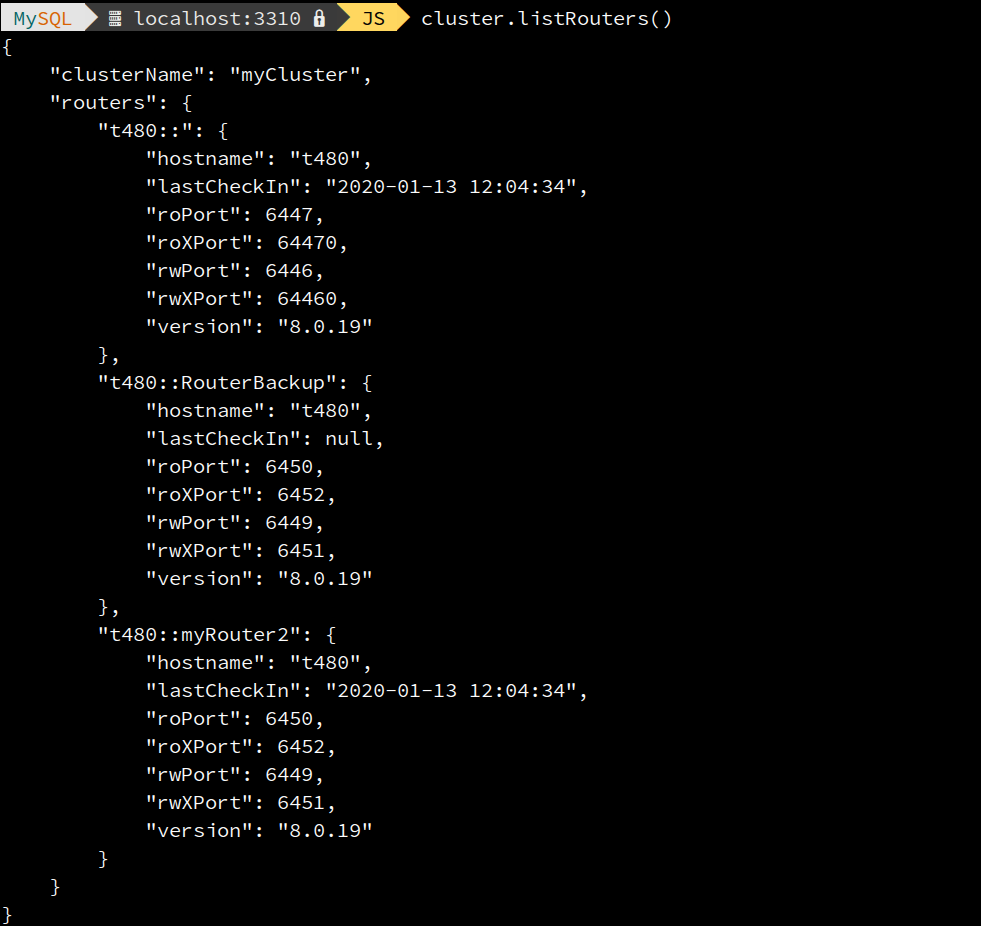

The following screenshot shows an example of the command’s output for an InnoDB cluster with three Router instances:
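For readers who want to post-process the listing rather than read it off the screen, here is a minimal sketch in JavaScript. It assumes the command returns an object with a `routers` map keyed by Router name, and that each entry carries fields named `hostname`, `lastCheckIn`, `roPort`, `rwPort`, `roXPort`, `rwXPort` and `version`. Those field names, the sample data and the helper `summarizeRouters` are illustrative assumptions, not taken from this post.

```javascript
// Hypothetical sample shaped like the information listed above.
// The field names are an assumption for illustration.
const sampleListing = {
  clusterName: "myCluster",
  routers: {
    "host1::myRouter1": {
      hostname: "host1",
      lastCheckIn: "2019-12-10 15:22:31",
      roPort: 6447,
      rwPort: 6446,
      roXPort: 64470,
      rwXPort: 64460,
      version: "8.0.19"
    },
    "host2::myRouter2": {
      hostname: "host2",
      lastCheckIn: null, // this Router has not checked in yet
      roPort: 6447,
      rwPort: 6446,
      roXPort: 64470,
      rwXPort: 64460,
      version: "8.0.19"
    }
  }
};

// Turn the listing into one human-readable line per Router.
function summarizeRouters(listing) {
  return Object.entries(listing.routers).map(([name, r]) =>
    `${name} on ${r.hostname} (v${r.version}): ` +
    `classic RW/RO ${r.rwPort}/${r.roPort}, ` +
    `X RW/RO ${r.rwXPort}/${r.roXPort}, ` +
    `last check-in ${r.lastCheckIn || "never"}`
  );
}

summarizeRouters(sampleListing).forEach(line => console.log(line));
```

Inside MySQL Shell, the same helper could be given the result of `<Cluster>.listRouters()`, provided the returned value behaves like a plain object.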

Remove Router from Metadata

InnoDB cluster is very flexible regarding the usage of MySQL Router, i.e. it’s possible to add and remove Router instances from a Cluster setup without any further intervention. However, because MySQL Router registers itself in the Metadata schema at bootstrap time, the registry can keep growing over time and include instances that are no longer in use.

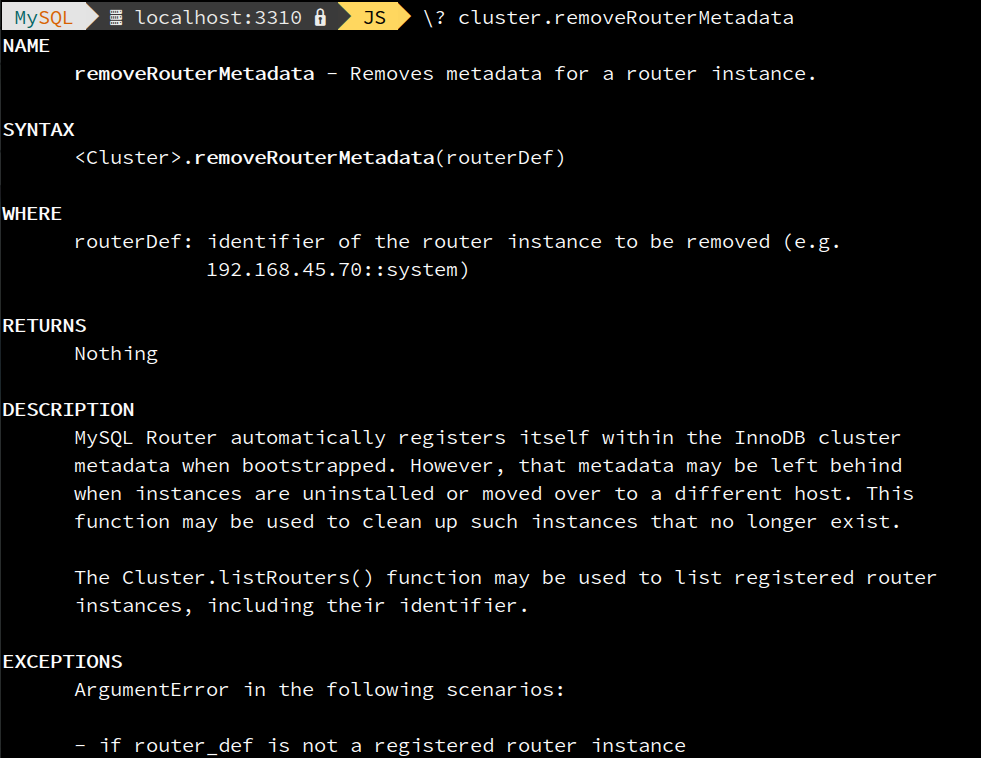

For that reason, whenever a Router instance is no longer in use (removed, uninstalled, etc.), it’s now possible to remove its entry from the Metadata using a single command call:

<Cluster>.removeRouterMetadata()

<ReplicaSet>.removeRouterMetadata()

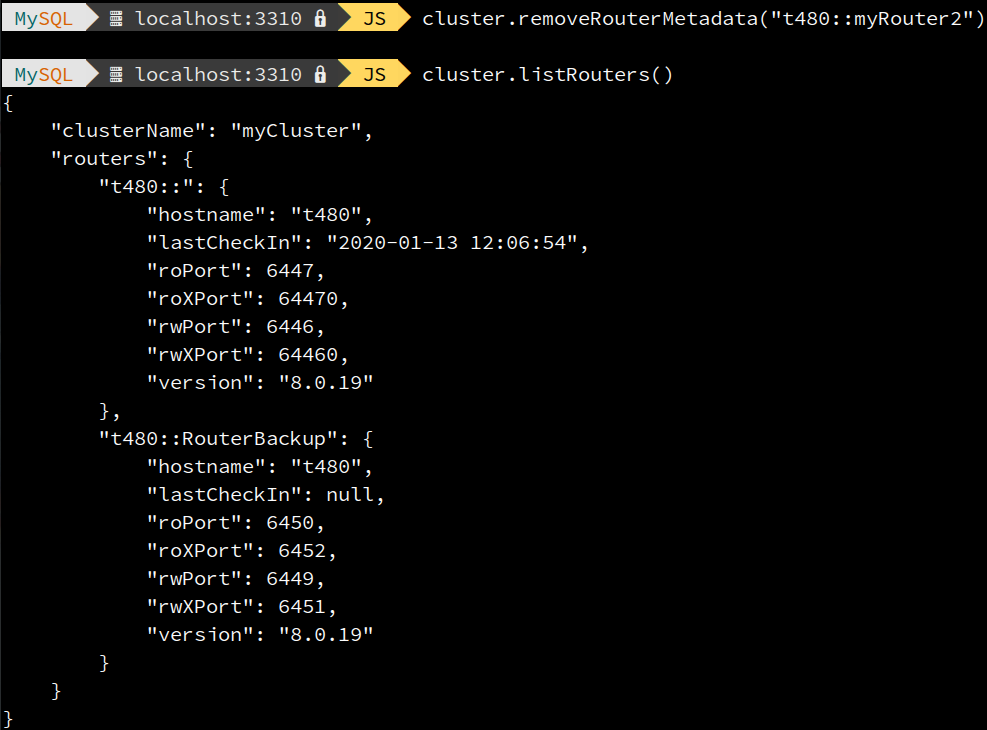

The following screenshot shows an example of removing one of the Routers’s metadata, myRouter2, and then checking the list of registered Routers to confirm the success of the operation:
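The two commands combine naturally into a small clean-up routine. The sketch below is one possible approach, not an official recipe. It assumes that the listing exposes a `routers` map whose entries have a `lastCheckIn` string such as `2019-12-10 15:22:31`, that this timestamp can be read as UTC, and that `removeRouterMetadata()` accepts the Router name used as the map key. The helper name `pruneStaleRouters` is hypothetical.

```javascript
// Remove the metadata of Routers whose last check-in is older than `cutoff`.
// `cluster` is any object offering listRouters() and removeRouterMetadata(),
// such as a Cluster or ReplicaSet handle (field names are assumptions).
function pruneStaleRouters(cluster, cutoff) {
  const removed = [];
  const routers = cluster.listRouters().routers;
  for (const [name, info] of Object.entries(routers)) {
    // Routers without a recorded check-in are skipped on purpose:
    // a missing timestamp does not prove the Router is unused.
    if (!info.lastCheckIn) continue;
    // Assumption: "YYYY-MM-DD hh:mm:ss" interpreted as UTC.
    const lastSeen = Date.parse(info.lastCheckIn.replace(" ", "T") + "Z");
    if (!Number.isNaN(lastSeen) && lastSeen < cutoff.getTime()) {
      cluster.removeRouterMetadata(name);
      removed.push(name);
    }
  }
  return removed;
}
```

A call such as `pruneStaleRouters(cluster, new Date(Date.now() - 30 * 24 * 3600 * 1000))` would target Routers that have not checked in for roughly 30 days. Reviewing the returned names before relying on such automation is advisable.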

Notable Bugs fixed

For the full list of bugs fixed in this release, please consult the changelog. However, some of them deserve a special mention.

IPv6 related bugs: BUG#30354273 and BUG#30548843

Although MySQL Shell 8.0.18 added support for IPv6, using an InnoDB cluster which consisted of MySQL Shell version 8.0.18 and MySQL Router 8.0.18 with IPv6 addresses was not possible.

This has been fixed in this release, by fixing BUG#30354273 and adding support for IPv6 addresses stored in the Metadata.

Also, combining MySQL Shell version 8.0.18 with Router 8.0.19 in a cluster that uses X Protocol connections resulted in the AdminAPI storing mysqlX IPv6 values incorrectly in the metadata, causing Router to fail (BUG#30548843).

BUG#30405569 – BAD USABILITY IN DBA.CREATECLUSTER() WHEN MYSQLD PORT > 6553

When creating a cluster on a target instance running on a port > 6553, the automatically calculated localAddress port is invalid. In that case, the AdminAPI error message was meaningless: it did not indicate what exactly had happened or what could or should be done.

This behavior was improved by introducing new informative messages indicating when localAddress was automatically generated and which values are being used.
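The 6553 threshold follows from how the default localAddress port is derived in this release: the instance port multiplied by 10, plus 1. Any result above 65535 is not a valid TCP port. The sketch below works through that arithmetic; the function names are hypothetical and only illustrate the calculation.

```javascript
const MAX_TCP_PORT = 65535;

// Default localAddress port derived from the MySQL server port.
function defaultLocalAddressPort(mysqlPort) {
  return mysqlPort * 10 + 1;
}

// Report the derived port and whether it is usable as a TCP port.
function checkLocalAddressPort(mysqlPort) {
  const derived = defaultLocalAddressPort(mysqlPort);
  return { derived: derived, valid: derived <= MAX_TCP_PORT };
}

console.log(checkLocalAddressPort(3306)); // derived 33061, valid
console.log(checkLocalAddressPort(6553)); // derived 65531, still valid
console.log(checkLocalAddressPort(6554)); // derived 65541, out of range
```

When the derived value is out of range, passing an explicit localAddress option to dba.createCluster() avoids the automatic calculation.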

BUG#30281908 – MYSQL SHELL CRASHED WHILE ADDING A INSTANCE TO INNODB CLUSTER

When using MySQL Clone as the recovery method, trying to add an instance that did not support RESTART to a cluster caused MySQL Shell to stop unexpectedly. Now, in such a situation a message explains that Cluster.rescan() must be used to ensure the instance is added to the metadata.

BUG#29705983 / BUG#95191 – CLUSTER.STATUS: GET_UINT(24): FIELD VALUE OUT OF THE ALLOWED RANGE (ARGUMENTERRO

The Cluster.status() operation could report an error “get_uint(24): field value out of the allowed range” because it was always expecting a positive value for some fields that could in fact have negative values. For example, this could happen when the clocks of different instances were offset.
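The failure mode is easy to reproduce in miniature. The sketch below is an illustration only and not the Shell's actual code: `getUint` and `getInt` are hypothetical helpers modelled on the error message, and the timestamps are made-up values showing how a clock offset between two instances yields a negative difference.

```javascript
// Strict unsigned read: rejects negative values.
function getUint(value) {
  if (!Number.isInteger(value) || value < 0) {
    throw new RangeError("field value out of the allowed range");
  }
  return value;
}

// Signed read: accepts any integer, including negative ones.
function getInt(value) {
  if (!Number.isInteger(value)) {
    throw new TypeError("field value is not an integer");
  }
  return value;
}

// Made-up timestamps in seconds, taken from two different hosts.
const commitTimeOnSource = 1000; // clock of the originating instance
const applyTimeOnTarget = 940;   // clock of the applying instance, running behind
const lagSeconds = applyTimeOnTarget - commitTimeOnSource; // negative

console.log(getInt(lagSeconds)); // the signed read succeeds
try {
  getUint(lagSeconds); // the unsigned read fails
} catch (err) {
  console.log(err.message);
}
```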

BUG#30545872 – CONFLICTING TRANSACTION SETS FOLLOWING COMPLETE OUTAGE OF INNODB CLUSTER

After a complete outage, when an instance restarted, it could have super_read_only disabled. That could lead to writes being performed on that instance, resulting in a transaction set that conflicts with the other cluster members. dba.rebootClusterFromCompleteOutage() would then fail due to the conflicting transaction set.

This has been fixed by ensuring super_read_only is enabled and persisted during the process of adding instances to a cluster.

BUG#30394258 – CLUSTER.ADD_INSTANCE: THE OPTION GROUP_REPLICATION_CONSISTENCY CANNOT BE USED

The AdminAPI ensures that all members of a cluster have the same consistency level as configured at cluster creation time. However, when high and non-default consistency levels were chosen for the cluster, adding instances to it resulted in an error 3796 indicating that group_replication_consistency cannot be used on the target instance.

This happened because high consistency levels cannot be used on instances that are RECOVERING and several transactions happen while the instance is in the RECOVERING phase. Other AdminAPI commands, for example dba.getCluster(), resulted in the same error in the same scenario whenever at least one member of the cluster was RECOVERING.

This has been fixed by ensuring that all internal sessions used by the AdminAPI use the consistency level of EVENTUAL regardless of the cluster’s consistency level.
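The idea behind the fix can be sketched as follows. This is not the Shell's internal code, only an illustration of pinning a session to EVENTUAL consistency before doing any other work on it. It assumes a session object exposing a `runSql()` method, as MySQL Shell sessions do; the helper name `prepareInternalSession` is hypothetical.

```javascript
// Pin a session to EVENTUAL consistency so that statements issued through it
// are not rejected while a member is RECOVERING.
function prepareInternalSession(session) {
  session.runSql("SET SESSION group_replication_consistency = 'EVENTUAL'");
  return session;
}
```

Because the setting is applied with SET SESSION, it affects only that connection and leaves the cluster-wide consistency level chosen at creation time untouched.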

BUG#30501590 – REBOOTCLUSTER(), STATUS() ETC FAIL IF TARGET IS AUTO-REJOINING

When automatic rejoin was enabled, if a target instance was rejoining the cluster, operations such as dba.rebootClusterFromCompleteOutage(), or Cluster.status() would fail.

This has been fixed by considering automatic rejoin as an instance state instead of a check that always aborts the operation, allowing the usage of <Cluster>.status() even when there are instances auto rejoining a cluster. On top of that, dba.rebootClusterFromCompleteOutage() can now detect if there are instances auto rejoining and override it so the command can properly reboot the cluster.

Bugs fixed with the new Metadata Schema Version

The new Metadata Schema allowed fixing some existing issues:

BUG#29507913 – INNODB CLUSTER / SHELL / METADATA HOSTNAME LIMITED TO LESS THAN 255 CHARACTERS

The InnoDB cluster metadata now supports hostnames up to 265 characters long, where 255 characters can be the host part and the remaining characters can be the port number.
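As a rough illustration of those limits, the sketch below checks whether a `host:port` address would fit. It assumes the address is written as host, colon, port, and that the separator counts towards the 265-character total. Both assumptions and the function name `fitsInMetadata` are illustrative; they do not describe the Shell's own validation.

```javascript
const MAX_HOST_LENGTH = 255;    // host part
const MAX_ADDRESS_LENGTH = 265; // host plus port part

// Check a "host:port" address against the limits described above.
function fitsInMetadata(address) {
  const sep = address.lastIndexOf(":");
  const host = sep === -1 ? address : address.slice(0, sep);
  return host.length <= MAX_HOST_LENGTH &&
         address.length <= MAX_ADDRESS_LENGTH;
}

console.log(fitsInMetadata("db1.example.com:3306"));
console.log(fitsInMetadata("a".repeat(256) + ":3306"));
```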

BUG#28541069 / BUG#92128 – DEFINER DOES NOT EXIST WHEN ISSUING THE COMMAND DBA.GETCLUSTER()

If the clusterAdmin name was changed after a cluster was created, an error could be issued indicating “definer does not exist”. This was because the clusterAdmin user was used as the DEFINER of the view required by the InnoDB cluster’s metadata.

This has been fixed with the new Metadata Schema introduced in this release.

BUG#28531271 – CREATECLUSTER FAILS WHEN INNODB_DEFAULT_ROW_FORMAT IS COMPACT OR REDUNDANT

dba.createCluster() could fail if the instance had been started with innodb_default_row_format=COMPACT or innodb_default_row_format=REDUNDANT. This was because no ROW_FORMAT was specified on the InnoDB cluster metadata tables, which caused them to use the one defined in innodb_default_row_format.

The metadata schema has been updated to use ROW_FORMAT = DYNAMIC.

BUG#29137199 / BUG#91972 – GROUP REPLICATION PREVENTS THE CREATION OF MULTI-PRIMARY CLUSTER

It was not possible to create a multi-primary cluster due to cascading constraints on the InnoDB cluster metadata tables.

This has been fixed with the new Metadata Schema version introduced in this release that removes the usage of cascading constraints and other limitations.

Try it now and send us your feedback

MySQL Shell 8.0.19 GA is available for download from the following links:

- MySQL Community Downloads website: https://dev.mysql.com/downloads/shell/

- MySQL Shell is also available on GitHub: https://github.com/mysql/mysql-shell

And as always, we are eager to listen to the community feedback! So please let us know your thoughts here in the comments, via a bug report, or a support ticket.

You can also reach us at #shell and #mysql_innodb_cluster in Slack: https://mysqlcommunity.slack.com/

The documentation of MySQL Shell can be found at https://dev.mysql.com/doc/mysql-shell/8.0/en/ and the official documentation of InnoDB cluster can be found in the MySQL InnoDB Cluster User Guide.

The full list of changes and bug fixes can be found in the 8.0.19 Shell Release Notes.

Enjoy, and thank you for using MySQL!