As you probably already know, in MySQL 5.7.3 release, InnoDB Memcached reached a record of over 1 million QPS on read only workload. The overview of the benchmark and testing results can be seen in an earlier blog by Dimitri. In this blog, I will spend sometime on the detail changes we have made to achieve this record number.

First thanks to Facebook’s Yoshinori with his bug#70172 that brought our attention to this single commit read only load test. We have been focussing on operation with large batch size. This bug prompted us to do a series of optimization on single commit read only queries and these optimizations eliminated almost all major bottlenecks from the InnoDB Memcached plugin itself.

If you are just getting familiar with InnoDB Memcached, there are some earlier blog on the feature to get you started. In a short word, InnoDB Memcached allows a fast path to retrieve key value data stored in the InnoDB table, with Memcached protocol.

The Benchmark:

Now, Let’s discuss the testing scenario. The InnoDB Memcached plugin configurations were all default in this benchmark, which means, the daemon_memcached_r_batch_size was also set to be 1, and the read operation would do a begin and commit transaction for each query. It is equivalent to auto-commit single selects through SQL interface. The innodb_api_trx_level is by default set to 0 (read uncommitted), however, changing it to 2 (repeatable read) gave the same benchmark result.

Another good news in 5.7.3 is that we start to support integer key column mapping, as it is common using integer as primary key for a table. And the table used in this benchmark comes with integer as the key column. The mapping table contains only key and value columns. So we set the corresponding flags, cas_column and expire_time_column column in the config containers table all to NULL, this avoids all the Memcached “extra” options. The table itself containers 1 million rows, each with a short integer key and a text value.

Here is the detail table definition

mysql> desc test.memc_test;

| Field | Type | Null | Key | Default | Extra |

| id | int(11) | NO | PRI | NULL | |

| value3 | text | YES | NULL |

To make InnoDB Memcached recognize this InnoDB table, we insert following row into memcached configure table – “innodb_memcache/containers”

INSERT INTO containers VALUES

(“memc1”, “test”, “memc_test”, “id”, “value3”, null, null, null, “PRIMARY”);

The memcached client for inserting rows and querying is a simple libmemcached program provided by Yoshinori. It does a single key look up and fetches corresponding value from the InnoDB table in each query.

We made some adjustment so that there are multiple client processes, each with multiple sessions. This was used to alleviate bottlenecks in the client itself.

As a note, there are many memcached clients out there, and Memcached clients can play important roles in the performance result itself. For example, we observed at least 3 times difference on result with Perl client Cache::Memcached::Fast when comparing to its slower version Cache::Memcached. And as far as we can see, libmemcached is one of the most efficient clients available, even though eventually it becomes bottleneck itself as the test progresses, especially requests through the network.

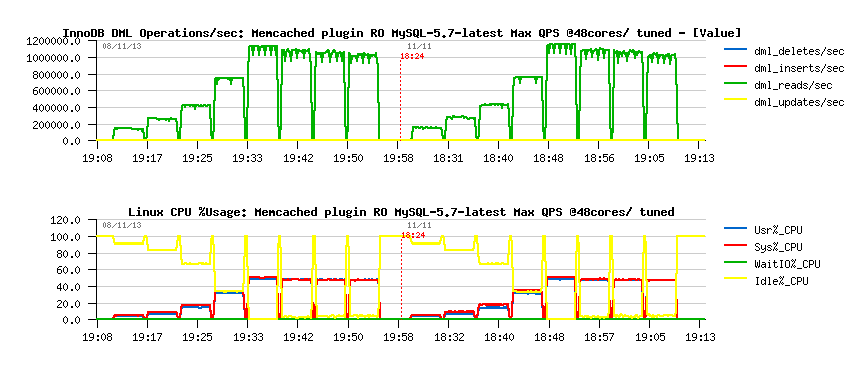

The test result can be seen in Dimitri’s blog, so I will not repeat them here. The summary is that we got close to 1.2 million QPS at the best. The next bottleneck now seems lying at the adaptive hash index’s global latch – “btr_search_latch”. The libmemcached client overhead is also significant.

Read Only QPS through InnoDB Memcached

The improvement:

There are several changes in both InnoDB Memcached code and Memcached Native code to achieve the record benchmarks.

1. The first is of course to address the issue brought by bug #70712. With daemon_memcached_r_batch_size set to 1, the transaction is being repeatedly started and committed for each query. It is better to cache the trx object itself, to avoid repeated create and destroy the trx object. Otherwise, the “trx_sys mutex” will kill the concurrency.

After the change, the trx object is cached with private memcached connection data. Each connection gets its own trx object, and it is used to handle transactions through this particular connection.

2. The next thing we did is to take advantage of the read only optimization recently made in the InnoDB code. This scenario (single read trx) is perfect to use the optimization. Whenever the read batch size is set to 1, InnoDB Memcached will treat incoming queries as auto-commit read only query. It will automatically hook up to the “fast path” of read-only operation in InnoDB.

3. After these two transaction related changes, we found the bottleneck comes from Memcached native Code itself. As a note, we embedded the Memcached code itself in our InnoDB Memcached plugin, so any bottleneck in Memcached will affect us.

The original Memcached memory allocation is protected by a global Cache Lock (engine->cache_lock), and it quickly rises in prominence in the profiling result.

Even though the data is stored in InnoDB, we happened to still use some of Memcached’s own memory allocation to store and deliver the result back to the front end. To fix this bottleneck, we stopped using Memcached Memory altogether. Instead a connection private memory buffer is used to store and deliver the result. This also saves a memcpy as we move the data to memcached memory as before.

This change makes InnoDB Memcached plugin as thin as possible, and only relies on the InnoDB buffer pool and Adaptive Hash Index (AHI) as the backing store for the data. This provides better scaling and memory handling than Memcached itself.

4. Another bottleneck in Memcached is its statistics mutex (“thread_stats->mutex”). This also becomes significant as testing goes. So to remove it, we switched to using atomic operations whenever the platform supports (most modern platforms do). With these changes, we can now well scale the plugin to over 100 connections without degradation as the number of connections are ramped up.

5. In addition to removing those major bottlenecks, we also streamline the code to remove some overhead work. For example, we start to cached the “search tuple”, so that there is no need to allocate the search tuple for each query. This is to keep the InnoDB Memcached as lean as possible.

With these changes, we have eliminated all the major InnoDB Memcached Plugin bottlenecks. The bottlenecks now comes from clients themselves and to a lesser degree from the Adaptive Hash Index search latch.

Future work:

Now the Memcached read goes more than twice as fast as those from SQL end. By using the InnoDB buffer pool as the in-memory store, and with InnoDB AHI, InnoDB Memcached can probably provide an efficient and more scalable store than Memcached itself.

There is still more to be done.

1. We will continue to remove some bottlenecks in InnoDB (such as btr_search_latch), as well as make InnoDB memcached leaner/faster.

2. We will add support to “mgets” command, which allows Memcached to fetch multiple results (corresponding to multiple keys) in one query attempts. This would again give us another big jump in terms of QPS.

3. We will start to focus more on insertion/updates operations.

4. We are considering extending the functionality of the memcached interface to support range queries etc. So to make it a more versatile key value store.

In summary, with these enhancements, the InnoDB Memcached becomes more and more attractive as as quick key value store through the MySQL server.

Your feedback and comments are important to us as we evolve and improve this plugin.