MySQL Group Replication (GR) is a replication plugin for MySQL that runs in either multi-primary (update anywhere) or single-primary mode, with built-in conflict detection and resolution, automatic distributed recovery, and group membership.

Its goal is to make high availability (HA) simple on off-the-shelf hardware, not only for new applications but also for existing ones, which was one of the motivations for the single-primary mode.

One of the big implications of a distributed system is the consistency guarantees that it provides. In terms of distributed consistency, in both normal and failure repair operations, GR has always been an eventually consistent system.

We can split the events that dictate consistency into two categories:

- control operations, either manual or automatically triggered by failures;

- data flow.

Control Operations

The operations in Group Replication that can be evaluated in terms of consistency are primary failover, member join/leave and network failures. Member join/leave is already covered by distributed recovery and write protection, which are described elsewhere. Network failures are likewise covered by the fencing modes. That leaves primary failover, which since 8.0.13 can also be an operation triggered by the DBA.

When a secondary is promoted to primary, either 1) it can be made available to application traffic immediately, regardless of how large the replication backlog is, or 2) access to it can be restricted until the backlog is dealt with.

With the first alternative, the system will take the minimum time possible to secure a stable group membership after the primary failure by electing a new primary and then allowing data access immediately, while it is still applying any backlog from the old primary. Write consistency is ensured (as we will see later), but reads can temporarily appear to go back in time.

Example E1: If client C1 wrote “A=2 WHERE A=1” on the old primary just before its failure, when client C1 is reconnected to the new primary it may read “A=1” until the new primary applies its backlog and catches up with the state of the old primary before it left the group.

With the second alternative, the system will secure a stable group membership after the primary failure and will elect a new primary in the same way as the first alternative, but in this case the group will wait until the new primary applies all backlog and only then will allow data access.

This will ensure that in a situation as described in Example E1, when client C1 is reconnected to the new primary it will read “A=2”. The trade-off is that the time required to fail over will be proportional to the backlog size, which on a balanced group should be small.
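The two failover alternatives can be sketched with a toy model. This is only an illustration of the behaviour described above, not how Group Replication is implemented; the `NewPrimary` class, its `wait_for_backlog` flag and the in-memory backlog are all hypothetical names introduced for the example.

```python
from dataclasses import dataclass, field


@dataclass
class NewPrimary:
    """Toy model of a secondary that was just promoted to primary."""
    data: dict
    backlog: list = field(default_factory=list)  # (key, value) writes not yet applied
    wait_for_backlog: bool = False  # True models the second alternative

    def apply_one(self):
        """Apply the oldest queued write inherited from the old primary, if any."""
        if self.backlog:
            key, value = self.backlog.pop(0)
            self.data[key] = value

    def read(self, key):
        """Return (value, number of backlog entries applied before serving)."""
        applied = 0
        if self.wait_for_backlog:
            # Second alternative: hold the client until the backlog is drained.
            while self.backlog:
                self.apply_one()
                applied += 1
        return self.data[key], applied


# Example E1: C1 wrote A=2 on the old primary just before it failed.
fast = NewPrimary(data={"A": 1}, backlog=[("A", 2)])
safe = NewPrimary(data={"A": 1}, backlog=[("A", 2)], wait_for_backlog=True)

stale_value, _ = fast.read("A")       # served immediately, backlog still pending
fresh_value, waited = safe.read("A")  # served only after the backlog is applied
print(stale_value, fresh_value, waited)
```

In this sketch the first alternative answers at once with the old value, while the second pays a wait that grows with the number of backlog entries.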

Until 8.0.14 there was no way to choose the failover policy; by default, availability was maximized. To see how to make the choice on 8.0.14 and later, please read the post “Preventing stale reads on primary fail-over!”.

Data Flow

The way in which data flow can impact group consistency is based on reads and writes, especially when they are distributed across all members.

This category applies to both modes, single-primary and multi-primary. To keep the text simple, I will restrict it to single-primary mode.

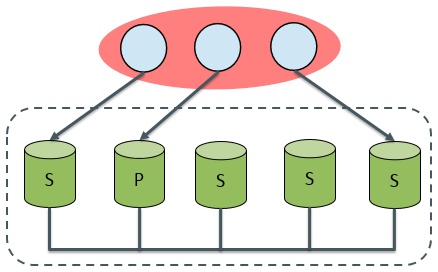

The usual read/write splitting of the incoming transactions into a single-primary group is to route writes to the primary and evenly distribute reads to the secondaries.

Since the group should behave as a single entity, it is reasonable to expect that writes on the primary are instantaneously available on the secondaries. However, even though Group Replication is built on top of Group Communication System (GCS) protocols that implement the Paxos algorithm, some parts of GR are asynchronous, which means that data is applied asynchronously on the secondaries. As a result, a client C2 can write “B=2 WHERE B=1” on the primary, immediately connect to a secondary and read “B=1”.
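The stale read seen by client C2 can be reproduced with a small simulation. This is a sketch under simplifying assumptions: the `Primary` and `Secondary` classes are hypothetical, delivery is modelled as an in-order queue, and applying the queue is an explicit step standing in for the asynchronous applier.

```python
from collections import deque


class Secondary:
    """Toy secondary: receives transactions in order, applies them later."""

    def __init__(self, data):
        self.data = dict(data)
        self.queue = deque()  # delivered but not yet applied

    def receive(self, key, value):
        self.queue.append((key, value))

    def apply_pending(self):
        # Stands in for the asynchronous applier catching up.
        while self.queue:
            key, value = self.queue.popleft()
            self.data[key] = value


class Primary:
    """Toy primary: commits locally and does not wait for secondaries to apply."""

    def __init__(self, data, secondaries):
        self.data = dict(data)
        self.secondaries = secondaries

    def write(self, key, value):
        self.data[key] = value
        for secondary in self.secondaries:
            secondary.receive(key, value)  # ordered delivery, no wait for apply


secondary = Secondary({"B": 1})
primary = Primary({"B": 1}, [secondary])

primary.write("B", 2)               # C2 writes on the primary
read_right_away = secondary.data["B"]  # C2 immediately reads on a secondary
secondary.apply_pending()           # later, the secondary catches up
read_later = secondary.data["B"]
print(read_right_away, read_later)
```

The write is already queued on the secondary in the right order, but until it is applied the secondary still serves the previous value.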

Until 8.0.14 this was the only consistency level available, but before presenting how you can now choose the consistency level, let's first understand the alternatives.

It is important to distinguish the possible synchronization points because we can synchronize data when we read or when we write.

If we synchronize when we read, the current client session will wait until a given point, which is the point in time at which all preceding update transactions are done, before it can start executing. With this approach only this session is affected; all other concurrent data operations are not.

If we synchronize when we write, the writing session will wait until all secondaries have written their data. Since GR follows a total order on writes, this implies waiting for this write and all preceding writes that may be in the secondaries’ queues to be applied. So when using this synchronization point, the writing session waits for all secondaries’ queues to be applied.

Either alternative will ensure that in the above example, client C2 would always read “B=2” even if it immediately connected to a secondary.
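The two synchronization points can be compared in a small simulation. This is a minimal sketch, assuming a single sequence number as a stand-in for the total order on writes; the `Group` and `Member` classes and the `sync_on_read` / `sync_on_write` flags are hypothetical names, not Group Replication options.

```python
class Member:
    """Toy group member with an ordered queue of not-yet-applied writes."""

    def __init__(self):
        self.data = {"B": 1}
        self.queue = []  # ordered (seq, key, value) entries

    def apply_until(self, seq):
        # Apply queued writes in total order up to and including `seq`.
        while self.queue and self.queue[0][0] <= seq:
            _, key, value = self.queue.pop(0)
            self.data[key] = value


class Group:
    def __init__(self, n_secondaries):
        self.primary = Member()
        self.secondaries = [Member() for _ in range(n_secondaries)]
        self.last_seq = 0  # position of the latest write in the total order

    def write(self, key, value, sync_on_write=False):
        self.last_seq += 1
        self.primary.data[key] = value
        for member in self.secondaries:
            member.queue.append((self.last_seq, key, value))
        if sync_on_write:
            # The writer waits until every secondary has applied its queue.
            for member in self.secondaries:
                member.apply_until(self.last_seq)

    def read(self, member, key, sync_on_read=False):
        if sync_on_read:
            # Only this reading session waits for preceding writes.
            member.apply_until(self.last_seq)
        return member.data[key]


# Synchronize on read: only the member serving the synchronized read catches up.
g1 = Group(n_secondaries=2)
g1.write("B", 2)
plain_read = g1.read(g1.secondaries[0], "B")
synced_read = g1.read(g1.secondaries[0], "B", sync_on_read=True)
other_backlog = len(g1.secondaries[1].queue)

# Synchronize on write: every later read is fresh without any extra wait.
g2 = Group(n_secondaries=2)
g2.write("B", 2, sync_on_write=True)
reads_after_sync_write = [g2.read(m, "B") for m in g2.secondaries]
print(plain_read, synced_read, other_backlog, reads_after_sync_write)
```

In the first group the cost is paid by the one reading session, and the other secondary is left untouched. In the second group the cost is paid once by the writer, on behalf of every later reader.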

Each alternative has its advantages and drawbacks, which are directly related to your system workload.

Let's look at some examples to see which alternative is more appropriate:

Synchronize on Writes

I want to load balance my reads without deploying additional restrictions on which server I read from to avoid reading stale data, and my group has far fewer writes than reads.

I have a group that has predominantly read-only data, and I want my read-write transactions to be applied everywhere once they commit, so that subsequent reads are done on up-to-date data that includes my latest write. That way I do not pay for the synchronization on every RO transaction, but only on RW ones.

In these two cases, the chosen synchronization point should be on write.

Synchronize on Reads

I want to load balance my reads without deploying additional restrictions on which server I read from to avoid reading stale data, and my group has far more writes than reads.

I want specific transactions in my workload to always read up-to-date data from the group, so that whenever sensitive data is updated (such as credentials for a file or similar data) I can enforce that reads return the most up-to-date value.

In these two cases, the chosen synchronization point should be on read.
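The workload-based part of this choice can be written as rough arithmetic. The sketch below assumes a deliberately crude cost model in which every synchronized transaction pays a fixed wait; the function name, its parameters and the default wait values are illustrative assumptions, not measurements. It does not capture the "specific sensitive transactions" case, which is about which reads must be fresh rather than about the read/write ratio.

```python
def cheaper_sync_point(reads_per_sec, writes_per_sec,
                       read_wait_ms=1.0, write_wait_ms=1.0):
    """Return which synchronization point costs less total wait per second.

    Assumed model: synchronizing on read makes every read wait
    `read_wait_ms`; synchronizing on write makes every write wait
    `write_wait_ms`.
    """
    total_read_wait = reads_per_sec * read_wait_ms
    total_write_wait = writes_per_sec * write_wait_ms
    return "write" if total_write_wait <= total_read_wait else "read"


read_heavy = cheaper_sync_point(reads_per_sec=1000, writes_per_sec=10)
write_heavy = cheaper_sync_point(reads_per_sec=10, writes_per_sec=1000)
print(read_heavy, write_heavy)
```

Under this model a read-heavy group pays less by synchronizing on writes, and a write-heavy group pays less by synchronizing on reads, which matches the examples above.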

These two synchronization points, plus a combination of both, can now be chosen as the Group Replication consistency level. The post “Consistent Reads” explains how you can use them.
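As a rough guide, the choices map onto values of the `group_replication_consistency` system variable. The variable and its values come from the MySQL 8.0 reference manual rather than from this post, and the two helper functions below are hypothetical; they only build the statement text and do not connect to a server.

```python
def consistency_level(sync_on_read, sync_on_write):
    """Map the two synchronization points to a consistency level name."""
    if sync_on_read and sync_on_write:
        return "BEFORE_AND_AFTER"
    if sync_on_read:
        return "BEFORE"    # wait for preceding transactions before executing
    if sync_on_write:
        return "AFTER"     # wait until the change is applied on other members
    return "EVENTUAL"      # no synchronization, the pre-8.0.14 behaviour


def set_statement(level, scope="SESSION"):
    """Build the SQL text that selects a level for the given scope."""
    return f"SET @@{scope}.group_replication_consistency = '{level}';"


sensitive_read = set_statement(consistency_level(sync_on_read=True, sync_on_write=False))
print(sensitive_read)
```

Setting the level at session scope fits the case where only specific transactions need up-to-date reads, since the rest of the workload keeps the default.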

Conclusion

I hope that this introduction to Group Replication consistency levels helps you understand how to take full advantage of them, so that you can focus on writing applications rather than on the HA properties of your system!