I’ve previously provided an example of using MySQL Cluster Manager to add nodes to a running MySQL Cluster deployment but I’ve since received a number of questions around how to do this in more complex circumstances (for example when ending up with more than 1 MySQL Server on a single host where each mysqld process should use a different port). The purpose of this post is to work through one of these more complex scenarios.

I’ve previously provided an example of using MySQL Cluster Manager to add nodes to a running MySQL Cluster deployment but I’ve since received a number of questions around how to do this in more complex circumstances (for example when ending up with more than 1 MySQL Server on a single host where each mysqld process should use a different port). The purpose of this post is to work through one of these more complex scenarios.

The starting point is an existing cluster made up of 3 hosts with the nodes (processes) as described in this MCM report:

|

1

2

3

4

5

6

7

8

9

10

11

12

13

|

mcm> SHOW STATUS -r mycluster; +--------+----------+-------------------------------+---------+-----------+---------+ | NodeId | Process | Host | Status | Nodegroup | Package | +--------+----------+-------------------------------+---------+-----------+---------+ | 1 | ndbmtd | paas-23-54.osc.uk.oracle.com | running | 0 | 7_2_5 | | 2 | ndbmtd | paas-23-55.osc.uk.oracle.com | running | 0 | 7_2_5 | | 49 | ndb_mgmd | paas-23-56.osc.uk.oracle.com | running | | 7_2_5 | | 50 | mysqld | paas-23-54.osc.uk.oracle.com | running | | 7_2_5 | | 51 | mysqld | paas-23-55.osc.uk.oracle.com | running | | 7_2_5 | | 52 | mysqld | paas-23-56.osc.uk.oracle.com | running | | 7_2_5 | | 53 | ndbapi | *paas-23-56.osc.uk.oracle.com | added | | | +--------+----------+-------------------------------+---------+-----------+---------+ 7 rows in set (0.01 sec) |

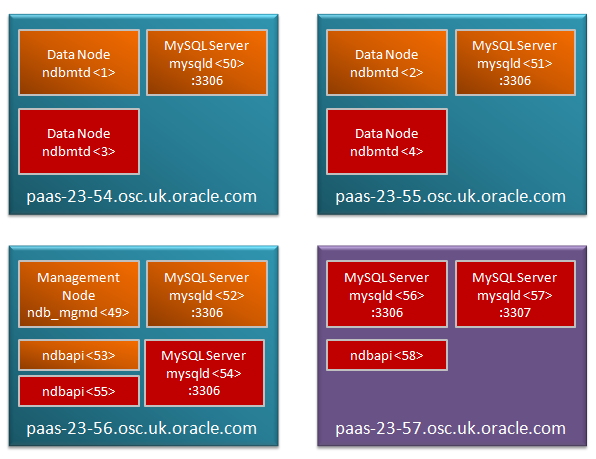

This same configuration is shown graphically in this diagram:

Note that the ‘ndbapi’ node isn’t actually a process but is instead a ‘slot’ that can be used by any NDB API client to access the data in the data nodes directly – this could be any of:

- A MySQL Server

- An application using the C++ NDB API directly

- A Memcached server using the direct NDB driver

- An application using the ClusterJ, JPA or modndb REST API

- The MySQL database restore command

This Cluster is now going to be extended by adding an extra host as well as extra nodes (both processes and ndbapi slots).

The following diagram illustrates what the final Cluster will look like:

The first step is to add the new host to the configuration and make it aware of the MySQL Cluster package being used (in this example, 7.2.5). Note that you should already have started the mcmd process on this new host (if not then do that now):

|

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

|

mcm> ADD HOSTS --hosts=paas-23-57.osc.uk.oracle.com mysite; +--------------------------+ | Command result | +--------------------------+ | Hosts added successfully | +--------------------------+ 1 row in set (8.04 sec) mcm> ADD PACKAGE -h paas-23-57.osc.uk.oracle.com --basedir=/home/oracle/cluster_7_2_5 7_2_5; +----------------------------+ | Command result | +----------------------------+ | Package added successfully | +----------------------------+ 1 row in set (0.68 sec) |

At this point the MCM agent on the new host is connected with the existing 3 but it has not become part of the Cluster – this is done by declaring which nodes should be on that host; at the same time I add some extra nodes to the existing hosts. As there will be more than one MySQL server (mysqld) running on some of the hosts, I’ll explicitly tell MCM what port number to use for some of the mysqlds (rather than just using the default of 3306).

|

1

2

3

4

5

|

mcm> ADD PROCESS -R ndbmtd@paas-23-54.osc.uk.oracle.com, ndbmtd@paas-23-55.osc.uk.oracle.com,mysqld@paas-23-56.osc.uk.oracle.com, ndbapi@paas-23-56.osc.uk.oracle.com,mysqld@paas-23-57.osc.uk.oracle.com, mysqld@paas-23-57.osc.uk.oracle.com,ndbapi@paas-23-57.osc.uk.oracle.com -s port:mysqld:54=3307,port:mysqld:57=3307 mycluster; |

|

1

2

3

4

5

6

|

+----------------------------+ | Command result | +----------------------------+ | Process added successfully | +----------------------------+ 1 row in set (2 min 34.22 sec) |

In case you’re wondering how I was able to predict the node-ids that would be allocated to the new nodes, the scheme is very simple:

- Node-ids 1-48 are reserved for data nodes

- Node-ids 49-256 are used for all other node types

- Within those ranges, node-ids are allocated sequentially

If you look carefully at the results you’ll notice that the ADD PROCESS command took a while to run (2.5 minutes) – the reason for this is that behind the scenes, MCM performed a rolling restart – ensuring that all of the existing nodes pick up the new configuration without losing database service. Before starting the new processes, it makes sense to double check that the correct ports are allocated to each of the mysqlds:

|

1

2

3

4

5

6

7

8

9

10

11

12

|

mcm> GET -d port:mysqld mycluster; +------+-------+----------+---------+----------+---------+---------+---------+ | Name | Value | Process1 | NodeId1 | Process2 | NodeId2 | Level | Comment | +------+-------+----------+---------+----------+---------+---------+---------+ | port | 3306 | mysqld | 50 | | | Default | | | port | 3306 | mysqld | 51 | | | Default | | | port | 3306 | mysqld | 52 | | | Default | | | port | 3307 | mysqld | 54 | | | | | | port | 3306 | mysqld | 56 | | | Default | | | port | 3307 | mysqld | 57 | | | | | +------+-------+----------+---------+----------+---------+---------+---------+ 6 rows in set (0.07 sec) |

At this point the new processes can be started and then the status of all of the processes confirmed:

|

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

|

mcm> START PROCESS --added mycluster; +------------------------------+ | Command result | +------------------------------+ | Process started successfully | +------------------------------+ 1 row in set (26.30 sec) mcm> SHOW STATUS -r mycluster; +--------+----------+-------------------------------+---------+-----------+---------+ | NodeId | Process | Host | Status | Nodegroup | Package | +--------+----------+-------------------------------+---------+-----------+---------+ | 1 | ndbmtd | paas-23-54.osc.uk.oracle.com | running | 0 | 7_2_5 | | 2 | ndbmtd | paas-23-55.osc.uk.oracle.com | running | 0 | 7_2_5 | | 49 | ndb_mgmd | paas-23-56.osc.uk.oracle.com | running | | 7_2_5 | | 50 | mysqld | paas-23-54.osc.uk.oracle.com | running | | 7_2_5 | | 51 | mysqld | paas-23-55.osc.uk.oracle.com | running | | 7_2_5 | | 52 | mysqld | paas-23-56.osc.uk.oracle.com | running | | 7_2_5 | | 53 | ndbapi | *paas-23-56.osc.uk.oracle.com | added | | | | 3 | ndbmtd | paas-23-54.osc.uk.oracle.com | running | 1 | 7_2_5 | | 4 | ndbmtd | paas-23-55.osc.uk.oracle.com | running | 1 | 7_2_5 | | 54 | mysqld | paas-23-56.osc.uk.oracle.com | running | | 7_2_5 | | 55 | ndbapi | *paas-23-56.osc.uk.oracle.com | added | | | | 56 | mysqld | paas-23-57.osc.uk.oracle.com | running | | 7_2_5 | | 57 | mysqld | paas-23-57.osc.uk.oracle.com | running | | 7_2_5 | | 58 | ndbapi | *paas-23-57.osc.uk.oracle.com | added | | | +--------+----------+-------------------------------+---------+-----------+---------+ 14 rows in set (0.08 sec) |

The enlarged Cluster is now up and running but any existing MySQL Cluster tables will only be stored across the original data nodes. To remedy that, each of those existing tables should be repartitioned:

|

1

2

3

4

5

6

7

8

9

10

11

|

mysql> ALTER ONLINE TABLE simples REORGANIZE PARTITION; Query OK, 0 rows affected (0.22 sec) Records: 0 Duplicates: 0 Warnings: 0 mysql> OPTIMIZE TABLE simples; +-------------------+----------+----------+----------+ | Table | Op | Msg_type | Msg_text | +-------------------+----------+----------+----------+ | clusterdb.simples | optimize | status | OK | +-------------------+----------+----------+----------+ 1 row in set (0.00 sec) |

You can safely perform the repartitioning while the Cluster is up and running (with your application sending in reads and writes) but there is a performance impact (has been measured at 50%) and so you probably want to do this at a reasonably quiet time of day.

As always, please post feedback and questions in the comments section of this post.