MySQL Cluster is designed to be a High Availability, Fault Tolerant database where no single failure results in any loss of service.

This is however dependent on how the user chooses to architect the configuration – in terms of which nodes are placed on which physical hosts, and which physical resources each physical host is dependent on (for example if the two blades containing the data nodes making up a particular node group are cooled by the same fan then the failure of that fan could result in the loss of the whole database).

Of course, there’s always the possibility of an entire data center being lost due to earthquake, sabotage etc. and so for a fully available system, you should consider using asynchronous replication to a geographically remote Cluster.

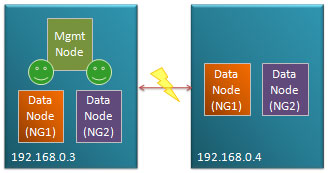

Fig 1. illustrates a typical small configuration with two physical hosts each running one or more data nodes from different node groups, and a management node on an independent machine (probably co-located with other applications as its resource requirements are minimal). If any single node (process) or physical host is lost then service can continue.

The basics of MySQL Cluster fault tolerance

Data held within MySQL Cluster is partitioned, with each node group being responsible for 2 or more fragments. All of the data nodes responsible for the same fragments form a Node Group (NG). If configured correctly, any single data node can be lost and the other data nodes within its node group will continue to provide service.
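Whether a layout is "configured correctly" in this sense can be checked mechanically. The following is a minimal sketch and not part of MySQL Cluster: the function name, the `placement` mapping and the host and node names are all hypothetical. It reports any node group whose data nodes all sit on a single host, since losing that host would take out the whole node group.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def single_host_node_groups(
    placement: Dict[str, Tuple[str, str]]
) -> List[Tuple[str, str]]:
    """Return (node_group, host) pairs where one host holds the whole node group.

    `placement` maps a data node name to (host, node_group). All names here
    are illustrative assumptions, not values read from a real cluster.
    """
    hosts_by_group = defaultdict(set)
    for host, group in placement.values():
        hosts_by_group[group].add(host)
    # A node group confined to one host is lost entirely if that host fails.
    return sorted(
        (group, next(iter(hosts)))
        for group, hosts in hosts_by_group.items()
        if len(hosts) == 1
    )


# Each node group is spread over both hosts: nothing to report.
good = {
    "ndbd1": ("hostA", "NG1"), "ndbd2": ("hostB", "NG1"),
    "ndbd3": ("hostA", "NG2"), "ndbd4": ("hostB", "NG2"),
}
# Each node group is confined to one host: both are flagged.
bad = {
    "ndbd1": ("hostA", "NG1"), "ndbd2": ("hostA", "NG1"),
    "ndbd3": ("hostB", "NG2"), "ndbd4": ("hostB", "NG2"),
}
print(single_host_node_groups(good))
print(single_host_node_groups(bad))
```

The sketch only looks at hosts; shared resources such as power or cooling would need the same kind of check with a different grouping key.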

The management node (ndb_mgmd process) is required when adding nodes to the cluster – either when the cluster is initially configured or when a node has been lost and restarted.

A heart-beat protocol is used between the data nodes in order to identify when a node has been lost. In many cases, the community of surviving data nodes can reconfigure themselves but in some cases they need help from the management node – much of this article focuses on how to identify these cases so that you can decide what level of redundancy is required for the management node.

Goals of the algorithm

The algorithm used by MySQL Cluster has 2 priorities (in order):

- Prevent database inconsistencies through “split brain” syndrome

- Keep the database up and running, serving the application

Split brain would occur if 2 data nodes within a node group lost contact with each other and independently decided that they should be master for the fragments controlled by their node group. This could lead to them independently applying conflicting changes to the data – making it very hard to recover the database (which would include undoing the changes that the application believes to have been safely committed). Note that a particular node doesn’t know whether its peer(s) has crashed or if it has just lost its connection to it. If the algorithm is not confident of avoiding a split brain situation then all of the data nodes are shut down – obviously that isn’t an ideal result and so it’s important to understand how to configure your cluster so that doesn’t happen.

The algorithm

If all of the data nodes making up a node group are lost then the cluster shuts down.

When data nodes lose contact with each other (could be failure of a network connection, process or host) then all of the data nodes that can still contact each other form a new community. Each community must decide whether its data nodes should stay up or shut down:

- If the community doesn’t contain at least 1 data node from each node group then it is not viable and its data nodes should shut down.

- If this community is viable and it can determine that it contains enough of the data nodes such that there can be no other viable community out there (one with at least 1 data node from each node group) then it will decide (by itself) to keep up all of its data nodes.

- If the community is viable but there is the possibility of another viable community then it contacts the arbitrator which decides which amongst all viable communities should be allowed to stay up. If the community can not connect to the arbitrator then its data nodes shut down.

In this way, at most one community of data nodes will survive and there is no chance of split brain.
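The three rules above can be sketched as a small decision function. This is an illustrative model rather than the actual NDB implementation, and every name in it is hypothetical. It also assumes one specific test for "there can be no other viable community": the community holds every data node of at least one node group, so no other community could contain a node from that group.

```python
from enum import Enum
from typing import Dict, Set


class Decision(Enum):
    SHUT_DOWN = "shut down"
    STAY_UP = "stay up"
    ASK_ARBITRATOR = "ask arbitrator"


def community_decision(community: Set[str],
                       node_groups: Dict[str, Set[str]]) -> Decision:
    """Decide what a community of mutually reachable data nodes should do."""
    # Rule 1: not viable unless every node group is represented.
    if any(not (members & community) for members in node_groups.values()):
        return Decision.SHUT_DOWN
    # Rule 2 (assumed test): holding all of some node group rules out
    # any other viable community.
    if any(members <= community for members in node_groups.values()):
        return Decision.STAY_UP
    # Rule 3: another viable community is possible, so defer.
    return Decision.ASK_ARBITRATOR


def resolve(decision: Decision, arbitrator_reachable: bool,
            arbitrator_approves: bool = True) -> Decision:
    """Apply the arbitrator step; an unreachable arbitrator means shut down."""
    if decision is not Decision.ASK_ARBITRATOR:
        return decision
    if arbitrator_reachable and arbitrator_approves:
        return Decision.STAY_UP
    return Decision.SHUT_DOWN


# Hypothetical cluster: 2 node groups of 2 data nodes each.
groups = {"NG1": {"n1", "n2"}, "NG2": {"n3", "n4"}}
print(community_decision({"n1", "n2"}, groups))        # no NG2 node
print(community_decision({"n1", "n2", "n3"}, groups))  # holds all of NG1
print(community_decision({"n1", "n3"}, groups))        # must ask
```

Keeping the arbitrator step in a separate function mirrors the text: the first two rules are decided by the community alone, and only the third involves anything outside the data nodes.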

The arbitrator will typically run on a management node. As you’ll see from the algorithm and the following examples, the cluster can sometimes survive a failure without needing a running management node but sometimes it can’t. In most of the examples, a single management node is used but you may well decide to have a second for redundancy so that more combinations of multiple failures can be handled. At any point in time, just one of the management nodes acts as the active arbitrator; if the active one is lost then a majority community of data nodes can hand control over to the other management node.

Note that the management node consumes very little resource and so can be co-located with other functions/applications but as you’ll see from the examples, you would normally avoid running it on the same host as a data node.

Example 1: Simplest cluster – loss of management node followed by a data node

Fig 2. shows a very simple cluster with 3 hosts, the management node running on 192.168.0.19 and then a single node group (NG1) made up of 2 data nodes split between 192.168.0.3 and 192.168.0.4. In this scenario, the management node is ‘lost’ (could be process, host or network failure) followed by one of the data nodes.

The surviving data node forms a community of 1. As it can’t know whether the other data node from NG1 is still viable and it can’t contact the arbitrator (the management node) it must shut itself down and so service is lost.

Note that the 2 nodes could be lost instantaneously or the management node might be lost first followed some time later by a data node.

To provide a truly HA solution there are 2 steps:

- Ensure that there is no single point of failure that could result in 192.168.0.19 and either of the other 2 hosts being lost.

- Run a second management node on a 4th host that can take over as arbitrator if 192.168.0.19 is lost.

Example 2: Half of data nodes isolated but management node available

In Fig 3. host 192.168.0.3 and its 2 data nodes remain up and running but become isolated from the management node and the other data nodes. 2 communities of connected data nodes are formed. Each of these communities is viable, as it contains a data node from each node group, but each recognizes that there could be another viable surviving community and so must defer to the management node. As 192.168.0.3 has lost its connection to the management node, the community of data nodes hosted there shut themselves down. The community hosted on 192.168.0.4 can contact the management node which, as this is the only community it can see, allows its data nodes to stay up and so service is maintained.

Example 3: Half of data nodes isolated and management node lost

The scenario shown in Fig 4. builds upon Example 2 but in this case, the management node is lost before one of the data node hosts loses its connection to the other.

In this case, both communities defer to the management node but as that has been lost they both shut themselves down and service is lost.

Refer to Example 1 to see what steps could be taken to increase the tolerance to multiple failures.

Example 4: Management node co-located with data nodes

Fig 5. shows a common, apparent short-cut that people may take when just 2 hosts are available: hosting the management node on the same machine as some of the data nodes. In this example, the connection between the 2 hosts is lost. As each community is viable they each attempt to contact the arbitrator; the data nodes on 192.168.0.3 are allowed to stay up while those on 192.168.0.4 shut down as they can’t contact the management node.

However, this configuration is inherently unsafe: if 192.168.0.3 failed then there would be a complete loss of service, as the data nodes on 192.168.0.4 would form a viable community but be unable to confirm that they represent the only viable community.

It would be tempting to make this more robust by running a second management node on 192.168.0.4. In that case, when each host becomes isolated from the other, the data nodes local to the management node that’s the current arbitrator will stay up. However, if the entire host running the active arbitrator failed then you would again lose service. The management node must be run on a 3rd host for a fault-tolerant solution.

Example 5: Odd number of data node hosts

Fig 6. shows a configuration where running the management node on the same host as some of the data nodes does provide a robust solution.

In this example 192.168.0.3 becomes isolated; its data nodes form a community of 2 which doesn’t include a data node from NG2 and so they shut themselves down. 192.168.0.4 and 192.168.0.19 are still connected and so they form a community of 4 data nodes; they recognize that the community holds all data nodes from NG2, so there can be no other viable community, and they are kept up without having to defer to the arbitrator.

Note that as there was no need to consult the management node, service would be maintained even if it was the machine hosting the management node that became isolated.
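The difference between Examples 4 and 5 can be reproduced with a rough simulation that removes each host in turn and applies the rules to the data nodes that remain. This is a simplified sketch under stated assumptions: the function name is hypothetical, only the surviving community is modelled, and the exact placement of node groups on hosts in Example 5 is a guess that is consistent with the description above, since the figure is not reproduced here.

```python
from typing import List, Tuple

# A data node is modelled as (host, node_group); this layout is illustrative.
DataNode = Tuple[str, str]


def survives_loss_of(host: str, data_nodes: List[DataNode],
                     arbitrator_host: str) -> bool:
    """Return True if service continues after `host` is lost or isolated."""
    survivors = [n for n in data_nodes if n[0] != host]
    groups = {ng for _, ng in data_nodes}
    # Not viable unless every node group still has a surviving data node.
    if {ng for _, ng in survivors} != groups:
        return False
    # Holding a complete node group means no other viable community exists.
    for ng in groups:
        total = sum(1 for _, g in data_nodes if g == ng)
        alive = sum(1 for _, g in survivors if g == ng)
        if alive == total:
            return True
    # Otherwise the survivors need the arbitrator, which must not be on `host`.
    return arbitrator_host != host


# Example 4: 2 hosts, management node co-located on 192.168.0.3.
two_hosts = [("192.168.0.3", "NG1"), ("192.168.0.3", "NG2"),
             ("192.168.0.4", "NG1"), ("192.168.0.4", "NG2")]
# Example 5: 3 hosts, 3 node groups (assumed layout), management node on .3.
three_hosts = [("192.168.0.3", "NG1"), ("192.168.0.3", "NG3"),
               ("192.168.0.4", "NG1"), ("192.168.0.4", "NG2"),
               ("192.168.0.19", "NG2"), ("192.168.0.19", "NG3")]

for name, nodes in (("Example 4", two_hosts), ("Example 5", three_hosts)):
    for lost in sorted({h for h, _ in nodes}):
        print(name, "lose", lost, "->",
              survives_loss_of(lost, nodes, "192.168.0.3"))
```

In the 2-host layout, losing 192.168.0.3 is fatal because the survivors need an arbitrator that went down with it. In the 3-host layout, whichever host is lost, the remaining two always hold one complete node group, so the arbitrator is never consulted.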

Example 6: Loss of all data nodes in a node group

Fig 7. illustrates the case where there are multiple data node failures such that all of the data nodes making up a node group are lost. In this scenario, the cluster has to shut down as that node group is no longer viable and the cluster would no longer be able to provide access to all data.

Example 7: Loss of multiple data nodes from different node groups

Fig 8. is similar to Example 6 but in this case, there is still a surviving data node from each node group. The surviving data node from NG1 forms a community with the one from NG2. As there could be another viable community (containing a data node from NG1 and NG2), they defer to the management node and as they form the only viable community they’re allowed to stay up.

Example 8: Classic, fully robust configuration

Fig 9. shows the classic, robust configuration. 2 independent machines both host management nodes. These are in turn connected by 2 independent networks to each of the data nodes (which are in turn all connected to each other via duplicated network connections).