UPDATE: This post refers to the first labs release of InnoDB cluster. For the updated version for the latest release, please see the new Tutorial blog post

Traditionally, setting up high availability (HA) in MySQL has been a challenging task, especially for people without advanced knowledge of MySQL. From understanding concepts and technologies, to the tooling, specific commands and files to execute and edit, there’s a lot of things you need to know even when planning a test deployment (the Quick Start Guide for Group Replication should give you an idea). So many people end up procrastinating setting up HA until disaster strikes and downtime happens.

The MySQL team at Oracle has now released a preview of a solution that aims to make it easy to combine multiple instances of MySQL together in order to provide redundancy for High Availability of the MySQL databases used by your applications.

In this tutorial, we will use the new MySQL Shell to create 3 sandbox instances, create a 3 member Group Replication cluster with them and setup MySQL Router as a proxy to hide the multiple MySQL instances behind a single TCP port. Group Replication provides synchronous replication for InnoDB and built-in automatic failover, while the Router lets applications connect to an appropriate member of the cluster with no changes to their code, other than an update to the MySQL connection parameters.

Downloading and Installing Required Packages

To get started, download and install the MySQL InnoDB cluster package for your system from labs.mysql.com, which will contain:

- MySQL Server 5.7.15 labs with the Group Replication plugin

- MySQL Router 2.1.0 labs

- MySQL Shell 1.0.5 labs with AdminAPI and mysqlprovision

The tar package must be extracted and you can then install the individual packages for each component, through usual platform specific methods.

Deploying Sandbox Instances

The first step is to deploy sandbox MySQL Server instances, so that we can play around and experiment safely, without affecting any existing MySQL databases.

Start MySQL Shell (as your ordinary, non-root OS user):

|

1 |

$ mysqlsh |

And then execute:

|

1

2

|

mysql-js> dba.deployLocalInstance(3310) |

The argument to deployLocalInstance() is the TCP port number where MySQL Server should listen for connections. By default, the sandbox is created in a directory named $HOME/mysql-sandboxes/<port>.

You will be prompted to pick a MySQL root account password for that instance, this is the password that you will use to connect to that instance in the future.

Note: use the same password for all your sandboxes in the same cluster.

Repeat the above command two more times, using different port numbers each time. This will allow us to create an InnoDB cluster that is tolerant to up to one failure.

|

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

|

kojima@VirtualBox:~$ mysqlsh Welcome to MySQL Shell 1.0.5-labs Development Preview Copyright (c) 2016, Oracle and/or its affiliates. All rights reserved. Oracle is a registered trademark of Oracle Corporation and/or its affiliates. Other names may be trademarks of their respective owners. Type '\help', '\h' or '\?' for help, type '\quit' or '\q' to exit. Currently in JavaScript mode. Use \sql to switch to SQL mode and execute queries. mysql-js> dba.deployLocalInstance(3310) A new MySQL sandbox instance will be created on this host in /home/kojima/mysql-sandboxes/3310 Please enter a MySQL root password for the new instance: Deploying new MySQL instance... Instance localhost:3310 successfully deployed and started. Use '\connect root@localhost:3310' to connect to the instance. mysql-js> dba.deployLocalInstance(3320) A new MySQL sandbox instance will be created on this host in /home/kojima/mysql-sandboxes/3320 Please enter a MySQL root password for the new instance: Deploying new MySQL instance... Instance localhost:3320 successfully deployed and started. Use '\connect root@localhost:3320' to connect to the instance. mysql-js> dba.deployLocalInstance(3330) A new MySQL sandbox instance will be created on this host in /home/kojima/mysql-sandboxes/3330 Please enter a MySQL root password for the new instance: Deploying new MySQL instance... Instance localhost:3330 successfully deployed and started. Use '\connect root@localhost:3330' to connect to the instance. |

Initializing the InnoDB Cluster

Next, we connect the shell to one of the instances we just created, which will be the seed instance. The seed instance is the one that would hold the initial state of the database, which will be replicated to the other instances as they’re added to the cluster. In this case, we’re working with empty sandbox instances, so any of them will do.

|

1

2

3

4

5

|

mysql-js> \c root@localhost:3310 Creating a Session to 'root@localhost:3310' Enter password: Classic Session successfully established. No default schema selected. |

Next, create the InnoDB cluster:

|

1

2

3

4

5

6

7

8

9

10

|

mysql-js> cluster = dba.createCluster('mycluster') A new InnoDB cluster will be created on instance 'root@localhost:3310'. ... Please specify an administrative MASTER key for the cluster 'mycluster': Creating InnoDB cluster 'mycluster' on 'root@localhost:3310'... Adding Seed Instance... Cluster successfully created. Use Cluster.addInstance() to add MySQL instances. ... |

The createCluster() command takes one parameter, which is a symbolic name you give to this InnoDB cluster. This will, among other things:

- Deploy the metadata schema in that instance (

mysql.mysql_innodb_cluster_metadata) - Verify that its configuration is correct for Group Replication, making changes if necessary

- Register it as the seed instance of the new cluster

- Create necessary internal administrative accounts

- Start Group Replication

You will prompted for a master administrative key. This key is needed to manage the InnoDB cluster and you will also need it later to bootstrap MySQL Router instances for use with the cluster. This allows a secure by default deployment without sacrificing ease-of-use. The system will create and manage single use accounts with minimal privileges internally for you.

Sandbox instances are pre-configured to work with replication, but if you use a pre-existing instance, it’s possible that some configuration options might not be set correctly. The command ensures that the settings are correct and if not, it reconfigures the server. If some change requires the MySQL Server to be restarted, you must do it manually whenever is convenient.

Add Instances to InnoDB Cluster

Now, you need to add replicas to the InnoDB cluster. We use the blank sandbox instances that we created earlier. Usually, whenever a new instance is added to a replica set in a cluster, they will be completely empty and need to catch up to the current state of the seed instance. If the amount of pre-existing data in the seed instance is very large, you may want to clone it or copy that data through a fast method beforehand. The MySQL Shell will soon also provide a mechanism to perform that step with minimal effort. Otherwise, Group Replication will perform a sync automatically, re-executing all transactions from the seed, as long as they’re in the MySQL binary log. Since the seed instance in this example has little to no data (ie. just the metadata schema and internal accounts) and have binary logging enabled from the beginning, there’s very little that new replicas need to catch up with. Any transactions that were executed in the seed instance will be re-executed in each added replica.

Add two blank instances to the InnoDB cluster:

|

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

|

mysql-js> cluster.addInstance("root@localhost:3320") A new instance will be added to the InnoDB cluster. Depending on the amount of data on the cluster this might take from a few seconds to several hours. Please provide the password for 'root@localhost:3320': Adding instance to the cluster ... The instance 'root@localhost:3320' was successfully added to the cluster. mysql-js> cluster.addInstance("root@localhost:3330") A new instance will be added to the InnoDB cluster. Depending on the amount of data on the cluster this might take from a few seconds to several hours. Please provide the password for 'root@localhost:3330': Adding instance to the cluster ... The instance 'root@localhost:3330' was successfully added to the cluster. |

And now, we check the current status of the cluster:

|

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

|

mysql-js> cluster.status() { "clusterName": "mycluster", "defaultReplicaSet": { "status": "Cluster tolerant to up to ONE failure.", "topology": { "localhost:3310": { "address": "localhost:3310", "status": "ONLINE", "role": "HA", "mode": "R/W", "leaves": { "localhost:3320": { "address": "localhost:3320", "status": "ONLINE", "role": "HA", "mode": "R/O", "leaves": {} }, "localhost:3330": { "address": "localhost:3330", "status": "ONLINE", "role": "HA", "mode": "R/O", "leaves": {} } } } } } } |

This command queries the current status of the InnoDB cluster and produces a short report. The status field for each instance should show either ONLINE or RECOVERING. RECOVERING means that the instance is receiving updates from the seed instance and should eventually switch to ONLINE.

Another point to note is that one of the instances (the PRIMARY) is marked R/W (read/writable), while the other two are marked R/O (read only). Only the instance marked R/W can execute transactions that update the database. If that instance becomes unreachable for any reason (like a system crash), one of the remaining two instances automatically takes over its place and becomes the new PRIMARY.

Deploy MySQL Router

In order for applications to handle failover, they need to be aware of the topology of the InnoDB cluster. They also need to know, at any time, which of the instances is the PRIMARY. While it’s possible for applications to implement that logic by themselves, MySQL Router can do that for you, with minimal work and no code changes in applications.

The recommended deployment of MySQL Router is on the same host as the application. In our tutorial, we’re running everything on the same host, so we’ll do that for MySQL Router too. You need the MASTER key of the InnoDB cluster to auto-configure MySQL Router.

Assuming MySQL Router is already installed, all we need to do is to bootstrap it with the metadata server, calling mysqlrouter with the following command line option from the system’s shell:

|

1

2

3

4

5

6

7

8

9

10

|

kojima@VirtualBox:~$ sudo mysqlrouter --bootstrap localhost:3310 Please enter the administrative MASTER key for the MySQL InnoDB cluster: MySQL Router has now been configured for the InnoDB cluster 'mycluster'. The following connection information can be used to connect to the cluster. Classic MySQL protocol connections to cluster 'mycluster': - Read/Write Connections: localhost:6446 - Read/Only Connections: localhost:6447 |

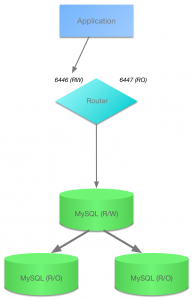

MySQL Router connects to the InnoDB cluster, fetches its metadata and configures itself for use. The generated configuration creates 2 TCP ports: one for read-write sessions (which redirect connections to the PRIMARY) and one for read-only sessions (which redirect connections to one of the SECONDARY instances, in a round-robin fashion).

If your application opens a very high number of database sessions overloading the PRIMARY instance and additionally does not need to perform updates, you can limit them to use read-only sessions and offload the PRIMARY.

Once bootstrapped and configured, start MySQL Router (or setup a service for it to start automatically when the system boots):

|

1

2

|

$ mysqlrouter & |

You can now connect a MySQL client to one of the incoming MySQL Router ports and see how the client gets transparently connected to one of the InnoDB cluster instances. To see which instance we are actually connected to, we simply query the ‘port’ status variable.

|

1

2

3

4

5

6

7

8

9

10

11

|

kojima@VirtualBox:~$ mysqlsh --uri root@localhost:6446 mysql-js> \sql Switching to SQL mode... Commands end with ; mysql-sql> select @@port; +--------+ | @@port | +--------+ | 3310 | +--------+ 1 row in set (0.00 sec) |

Here we connected to the read-write port (6446), so the Router connected us to the PRIMARY server, which is listening on port 3310. Now, try connecting to the read-only port (6447) and see what you get.

The \sql shell command switches the MySQL Shell from the default JavaScript mode to SQL mode, where you can directly execute SQL statements.

Checking Status of the InnoDB Cluster

To check the status of the InnoDB cluster at a later time, you can get a reference to the InnoDB cluster object by connecting to the cluster through the Router or directly to one of the instances. If you want to make changes to the cluster, you would need to connect to the read-write port of the Router.

|

1

2

3

4

5

6

7

8

9

10

11

12

13

14

|

kojima@VirtualBox:~$ mysqlsh --uri root@localhost:6446 Creating a Session to 'root@localhost:6446' Enter password: ... mysql-js> cluster=dba.getCluster() When the InnoDB cluster was setup, a MASTER key was defined in order to enable performing administrative tasks on the cluster. Please specify the administrative MASTER key for the default cluster: <Cluster:mycluster> mysql-js> cluster.status() { "clusterName": "mycluster", ... |

Putting the InnoDB Cluster to the Test

Next we want to challenge the InnoDB cluster and test if the failover really works. We are going to kill the PRIMARY instance and see if one of the other instances takes over automatically. For this purpose we use the dba.killLocalInstance() function (in \js mode).

|

1

2

3

4

5

|

mysql-js> dba.killLocalInstance(3310) The MySQL sandbox instance on this host in /home/kojima/mysql-sandboxes/3310 will be killed Killing MySQL instance... Instance localhost:3310 successfully killed. |

After that we again check (from our test session to the MySQL Router) which instance we are now connected to. The first SELECT fails since the connection to the original PRIMARY was lost. MySQL Shell automatically reconnects for us and we simply execute the command again.

|

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

|

mysql-js> \sql Switching to SQL mode... Commands end with ; mysql-sql> SELECT @@port; ERROR: 2013 (HY000): Lost connection to MySQL server during query The global session got disconnected. Attempting to reconnect to 'root@localhost:6446'... The global session was successfully reconnected. mysql-sql> SELECT @@port; +--------+ | @@port | +--------+ | 3330 | +--------+ 1 row in set (0.00 sec) mysql-sql> |

This shows that the InnoDB cluster provided us with automatic failover, that MySQL Router has automatically reconnected us to the new PRIMARY instance, and that we have high availability.

With this, you should be able to get started with MySQL InnoDB clusters and play with High Availability with minimal effort. This is still an early alpha/preview, many important features are still missing and definitely is not ready for production use. But it should give a hint of what’s cooking and let you play with Group Replication, MySQL Router and other HA and horizontal scalability related technologies that have been traditionally very hard until now.

If you find issue with the preview or have feature requests, please file a bug report at bugs.mysql.com If you’re not sure about what category to file under, use MySQL Server: Document Store: X Shell